A hybrid approach for real-time hand tracking using fiducial markers and inertial sensors

IF 1.9

Q2 MULTIDISCIPLINARY SCIENCES

Citations: 0

Abstract

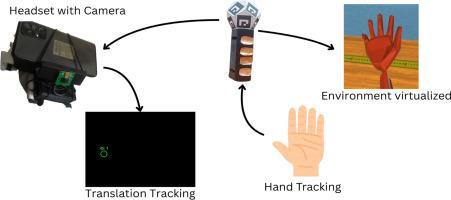

This paper presents a cost-effective hybrid hand-tracking technique that integrates fiducial marker detection, capacitive touch sensing, and inertial measurement for real-time gesture recognition in immersive environments. The system is implemented on lightweight hardware comprising a Raspberry Pi Zero 2 W and an ESP32, with OpenCV’s ArUco marker detection enabling 3D hand pose estimation, capacitive sensors supporting finger-state recognition, and an Inertial Measurement Unit (IMU) providing orientation tracking. Optimizations such as exposure adjustment and region-of-interest processing ensure robust marker detection under variable illumination, while sensor data is transmitted via Bluetooth Low Energy (BLE) and WebSocket protocols for synchronization with external devices.
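The abstract does not say how the region of interest is chosen, so the following is only a minimal sketch of one common approach: search a padded box around the marker corners found in the previous frame, then map any new detections back to full-frame coordinates. The function names `roi_from_corners` and `to_frame_coords`, and the `margin` parameter, are hypothetical and not taken from the paper.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), with x1 and y1 exclusive


def roi_from_corners(corners: List[Point], frame_w: int, frame_h: int,
                     margin: float = 0.5) -> Box:
    """Padded bounding box around the last detected marker, clamped to the frame.

    `margin` is an assumed tuning parameter: the fraction of the marker's
    width/height added on each side to tolerate motion between frames.
    """
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    pad_x = (max(xs) - min(xs)) * margin
    pad_y = (max(ys) - min(ys)) * margin
    x0 = max(0, int(min(xs) - pad_x))
    y0 = max(0, int(min(ys) - pad_y))
    x1 = min(frame_w, int(max(xs) + pad_x) + 1)
    y1 = min(frame_h, int(max(ys) + pad_y) + 1)
    return x0, y0, x1, y1


def to_frame_coords(corners_in_roi: List[Point], roi: Box) -> List[Point]:
    """Shift corner coordinates detected inside the ROI crop back to the full frame."""
    x0, y0, _, _ = roi
    return [(x + x0, y + y0) for x, y in corners_in_roi]


if __name__ == "__main__":
    last_corners = [(100.0, 100.0), (140.0, 100.0), (140.0, 140.0), (100.0, 140.0)]
    roi = roi_from_corners(last_corners, frame_w=640, frame_h=480)
    print("search window:", roi)
```

In a pipeline like the one described, the detector would run on the cropped window first and fall back to the full frame when the marker is lost; that fallback policy is likewise an assumption here.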

The methodological novelty of this work is highlighted as follows:

• High Accuracy Across Modalities: Achieved 3.4 mm localization accuracy, 85–91% orientation accuracy, and ∼2.9 mm hand pose keypoint accuracy, with trajectory fidelity maintained at 80–81%.

• Robust Finger-State Recognition: The capacitive sensing module consistently delivered 96.1% accuracy in detecting finger states across multiple runs.

• Validated Communication Trade-offs: Latency testing established complementary roles of Wi-Fi (high throughput, ∼467 msg/s) and BLE (low latency, ∼50 ms, >98% reliability) for real-time applications.

By fusing multiple sensing modalities, the method delivers enhanced accuracy, responsiveness, and stability while minimizing computational overhead. The system provides a reproducible, modular, and scalable solution suitable for VR/AR interaction, assistive technology, education, and human–computer interaction.
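The abstract does not name the fusion algorithm. As an illustration only, the sketch below uses a complementary filter, a common lightweight choice on constrained hardware: the gyroscope rate is integrated for a smooth short-term estimate, and a marker-derived angle, when one is available, slowly corrects the drift. The function names, the single-axis (yaw) simplification, and the `alpha` weight are all assumptions rather than details from the paper.

```python
import math
from typing import Optional


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def complementary_update(yaw: float, gyro_rate: float, dt: float,
                         marker_yaw: Optional[float] = None,
                         alpha: float = 0.98) -> float:
    """One filter step for a single rotation axis.

    yaw        -- previous fused estimate (rad)
    gyro_rate  -- angular rate from the IMU (rad/s)
    dt         -- time since the previous step (s)
    marker_yaw -- angle from marker pose estimation, or None if no marker was seen
    alpha      -- assumed weight on the gyro prediction (0..1)
    """
    predicted = wrap_angle(yaw + gyro_rate * dt)
    if marker_yaw is None:
        # No camera measurement this step: rely on the gyro alone.
        return predicted
    # Correct along the shortest angular distance to the marker measurement.
    error = wrap_angle(marker_yaw - predicted)
    return wrap_angle(predicted + (1.0 - alpha) * error)


if __name__ == "__main__":
    yaw = 0.0
    for step in range(5):
        # Hypothetical readings: constant 0.5 rad/s rotation, marker seen every other step.
        marker = 0.05 * (step + 1) if step % 2 == 0 else None
        yaw = complementary_update(yaw, gyro_rate=0.5, dt=0.1, marker_yaw=marker)
        print(f"step {step}: yaw = {yaw:.4f} rad")
```

A higher `alpha` trusts the gyro more and gives smoother output, while a lower value pulls the estimate toward the marker measurement faster; a full 3D implementation would typically work with quaternions instead of a single angle.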



Source journal

MethodsX

Health Professions-Medical Laboratory Technology

CiteScore

3.60

Self-citation rate

5.30%

Articles published

314

Review time

7 weeks
