EyeMap: A fusion-based method for eye movement-based visual attention maps as predictive markers of parkinsonism

IF 1.9

Q2 MULTIDISCIPLINARY SCIENCES

Citations: 0

Abstract

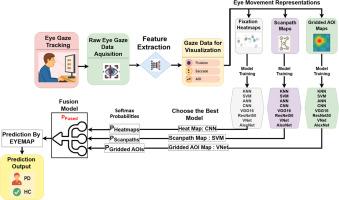

EyeMap is a method for visualizing and classifying eye movement patterns using three representations: scanpaths, fixation heatmaps, and gridded Areas of Interest (AOIs). It combines the predictions of modality-specific machine learning and deep learning models through late fusion to produce interpretable gaze representations. By capturing the spatial, temporal, and regional aspects of gaze data, the method improves diagnostic interpretability and supports the detection of Parkinsonian symptoms. The representations offer complementary perspectives on gaze behavior: spatial focus, temporal scan order, and the allocation of attention across regions of interest. To support the development and validation of the method, a dataset was created from visualizations of structured visual tasks completed by patients with Parkinson's disease (PD) and by healthy controls. EyeMap shows that vision-driven models may detect PD-specific gaze anomalies without manual feature engineering. All implementation steps, from data acquisition to model fusion, are described in full to enable reproducibility and adaptation to other gaze-based analysis contexts.
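The abstract names the three gaze representations but does not say how they are computed. The following is a minimal, hypothetical sketch, assuming fixations arrive as `(x, y, duration_ms)` tuples in stimulus-screen pixels, of how a regional view (AOI dwell shares on a grid), a temporal view (the ordered sequence of AOI cells visited), and a spatial view (a duration-weighted Gaussian heatmap) could all be derived from the same fixation list. EyeMap renders its representations as images for vision models; this sketch stops at the numeric grids. All function names, the grid size, and the Gaussian width `sigma` are illustrative assumptions, not the authors' parameters.

```python
import math
from typing import List, Tuple

# Hypothetical fixation record: (x_px, y_px, duration_ms) in stimulus-screen pixels.
Fixation = Tuple[float, float, float]


def cell_of(x: float, y: float, width: float, height: float,
            rows: int, cols: int) -> int:
    """Row-major index of the AOI grid cell containing (x, y), or -1 if off-screen."""
    if not (0 <= x < width and 0 <= y < height):
        return -1
    r = min(rows - 1, int(y * rows / height))  # clamp guards float rounding at the edge
    c = min(cols - 1, int(x * cols / width))
    return r * cols + c


def aoi_dwell_shares(fixations: List[Fixation], width: float, height: float,
                     rows: int, cols: int) -> List[float]:
    """Fraction of total on-screen fixation time spent in each AOI cell (regional view)."""
    dwell = [0.0] * (rows * cols)
    for x, y, dur in fixations:
        k = cell_of(x, y, width, height, rows, cols)
        if k >= 0:
            dwell[k] += dur
    total = sum(dwell)
    return [d / total for d in dwell] if total > 0 else dwell


def scanpath_sequence(fixations: List[Fixation], width: float, height: float,
                      rows: int, cols: int) -> List[int]:
    """Ordered AOI cells visited, with consecutive repeats collapsed (temporal view)."""
    seq: List[int] = []
    for x, y, _ in fixations:
        k = cell_of(x, y, width, height, rows, cols)
        if k >= 0 and (not seq or seq[-1] != k):
            seq.append(k)
    return seq


def fixation_heatmap(fixations: List[Fixation], width: float, height: float,
                     res: int, sigma: float) -> List[List[float]]:
    """Duration-weighted Gaussian heatmap on a res x res grid, scaled to peak 1 (spatial view)."""
    heat = [[0.0] * res for _ in range(res)]
    for x, y, dur in fixations:
        for i in range(res):
            cy = (i + 0.5) * height / res  # centre of grid row i
            for j in range(res):
                cx = (j + 0.5) * width / res  # centre of grid column j
                d2 = (cx - x) ** 2 + (cy - y) ** 2
                heat[i][j] += dur * math.exp(-d2 / (2 * sigma ** 2))
    peak = max((v for row in heat for v in row), default=0.0)
    if peak > 0:
        heat = [[v / peak for v in row] for row in heat]
    return heat
```

For example, with fixations `[(100, 100, 200), (120, 110, 150), (1800, 1000, 600)]` on a 1920×1080 screen and a 2×2 grid, the first two fixations fall in cell 0 and the third in cell 3, so the scanpath sequence is `[0, 3]`. Using one shared fixation list for all three views keeps them aligned, which matters if their classifiers are later fused.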

1. A structured method was developed to visualize eye-tracking data in three distinct formats
2. Classification outputs from separate gaze visualizations were combined using softmax-level fusion
3. A new eye-tracking dataset was generated to support method development and reproducibility
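The source states only that outputs were combined by "softmax-level fusion". One common reading of that phrase is late fusion by averaging each model's softmax probabilities, optionally with per-model weights, and taking the arg-max. The sketch below assumes exactly that rule; the modality names, the `CLASSES` order, and the weights are hypothetical, and the paper may use a different combination.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical class order; the source does not specify the label encoding.
CLASSES = ("HC", "PD")


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def softmax_late_fusion(logits_by_modality: Dict[str, Sequence[float]],
                        weights: Optional[Dict[str, float]] = None) -> List[float]:
    """Weighted average of per-modality softmax probabilities (equal weights by default)."""
    if not logits_by_modality:
        raise ValueError("need at least one modality")
    if weights is None:
        weights = {name: 1.0 for name in logits_by_modality}
    total_w = sum(weights[name] for name in logits_by_modality)
    n_classes = len(next(iter(logits_by_modality.values())))
    fused = [0.0] * n_classes
    for name, logits in logits_by_modality.items():
        probs = softmax(logits)
        if len(probs) != n_classes:
            raise ValueError(f"{name}: expected {n_classes} classes, got {len(probs)}")
        for k, p in enumerate(probs):
            fused[k] += weights[name] * p / total_w
    return fused


def predict(logits_by_modality: Dict[str, Sequence[float]],
            weights: Optional[Dict[str, float]] = None) -> Tuple[str, List[float]]:
    """Return the arg-max class label and the fused probability vector."""
    fused = softmax_late_fusion(logits_by_modality, weights)
    best = max(range(len(fused)), key=fused.__getitem__)
    return CLASSES[best], fused
```

For instance, `predict({"scanpath": [0.0, 0.0], "heatmap": [0.0, math.log(3)], "aoi": [0.0, math.log(3)]})` averages `[0.5, 0.5]`, `[0.25, 0.75]`, and `[0.25, 0.75]`, giving `("PD", ≈[0.333, 0.667])`. Fusing probabilities rather than raw logits keeps each model's output on the same [0, 1] scale, so no single modality dominates merely because its logits are larger.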



Source journal: MethodsX

Subject category: Health Professions-Medical Laboratory Technology

CiteScore: 3.60

Self-citation rate: 5.30%

Articles published: 314

Review time: 7 weeks
