Towards instructional collaborative robots: From video-based learning to feedback-adapted instruction

IF 14.2

Zone 1, Engineering & Technology

Q1 ENGINEERING, INDUSTRIAL

Citations: 0

Abstract

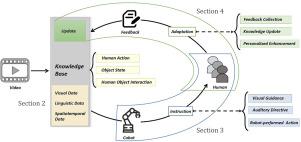

Collaborative robots (cobots) enhanced by artificial intelligence (AI) are enabling intelligent, human-centric manufacturing environments. These dynamic settings require cobots with cognitive intelligence, i.e., capabilities covering perception, learning, decision-making, and adaptation. Such intelligence enables proactive collaboration that integrates bidirectional instructional and cooperative competencies. However, while extensive research has focused on improving robots' collaborative skills, systematic investigations into the instructional capabilities of cobots remain limited. To lay the technological foundation for addressing this gap, this survey adopts a multimodal perspective to review three essential aspects of the field: (1) robot learning from video (LfV) for acquiring instructional capabilities, (2) robot-guided instruction methodologies, and (3) feedback-driven adaptation. We present a systematic review of technologies for representing human actions, object states, and human-object interactions (HOI), with a particular focus on multimodal data sources from video. Furthermore, we analyze diverse instructional strategies, including visual guidance, auditory directives, and robot-performed actions, emphasizing their effectiveness in robot-guided instruction. A significant focus is placed on feedback-driven adaptation mechanisms, which enable cobots to dynamically refine their instructional capabilities based on user feedback. We identify key challenges such as environmental complexity, user variability, real-time processing constraints, and trust-building requirements, while also highlighting emerging opportunities in multimodal integration, AI-powered robots, and collaborative learning systems. Finally, we underscore the transformative potential of instructional cobots in smart manufacturing and emphasize the need for further research.

Source journal

Journal of Manufacturing Systems

Engineering & Technology - Engineering: Industrial

CiteScore

23.30

Self-citation rate

13.20%

Articles published

216

Review time

25 days

About the journal:

The Journal of Manufacturing Systems is dedicated to showcasing cutting-edge fundamental and applied research in manufacturing at the systems level. Encompassing products, equipment, people, information, control, and support functions, manufacturing systems play a pivotal role in the economical and competitive development, production, delivery, and total lifecycle of products, meeting market and societal needs.

With a commitment to publishing archival scholarly literature, the journal strives to advance the state of the art in manufacturing systems and foster innovation in crafting efficient, robust, and sustainable manufacturing systems. The focus extends from equipment-level considerations to the broader scope of the extended enterprise. The Journal welcomes research addressing challenges across various scales, including nano, micro, and macro-scale manufacturing, and spanning diverse sectors such as aerospace, automotive, energy, and medical device manufacturing.
