Low-depth quantum approximate optimization algorithm for maximum likelihood detection in massive MIMO

Abstract

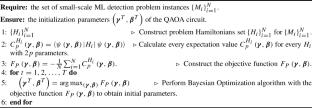

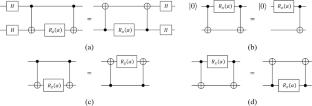

In massive multiple-input multiple-output (MIMO) systems, maximum likelihood (ML) detection can be transformed into a combinatorial optimization problem. This problem is NP-hard, and its complexity grows as the numbers of antennas and symbols increase. The quantum approximate optimization algorithm (QAOA) is a hybrid quantum-classical algorithm that has shown significant advantages in approximately solving combinatorial optimization problems. This paper proposes a comprehensive QAOA-based ML detection scheme for binary symbols. Because small-scale problems with sparse channel matrices can be solved with only a 1-level QAOA, we derive a universal and concise analytical expression for the 1-level QAOA expectation within the proposed framework, which facilitates the analysis of solutions to small-scale problems. For large-scale problems that require more than one QAOA level, we introduce a CNOT gate elimination and circuit parallelization algorithm that reduces both the number of error-prone CNOT gates and the circuit depth, thereby mitigating the effect of noise. We also propose a Bayesian optimization-based parameter initialization algorithm that obtains initial parameters for large-scale QAOA from small-scale and classical instances, increasing the likelihood of finding the exact solution. In numerical experiments, we demonstrate robustness to noise by evaluating the bit error rate (BER), and the results show that our QAOA-based ML detector achieves significantly improved performance. The convergence curves of the loss function further show that the proposed scheme has clear advantages both in parameter convergence and in the minimum value to which the loss converges.
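To make the mapping from ML detection to QAOA concrete, here is a minimal NumPy sketch under stated assumptions: real-valued binary (BPSK) symbols x in {-1,+1}^N, a hypothetical 6x4 Gaussian channel, and an Ising cost obtained by expanding ‖y - Hx‖² with x_i² = 1, which gives couplings J_ij = 2(HᵀH)_ij for i < j and local fields h = -2Hᵀy. The 1-level QAOA expectation is evaluated here by brute-force statevector simulation, not by the paper's analytical expression (which is not reproduced in this section). The helper names `ml_to_ising`, `cost_vector` and `qaoa1_expectation`, the angle grids and the toy instance are illustrative choices, not the authors' method.

```python
import itertools

import numpy as np


def ml_to_ising(H, y):
    """Rewrite ||y - Hx||^2, x in {-1,+1}^N, as sum_{i<j} J_ij x_i x_j + h.x + const."""
    G = H.T @ H
    J = 2.0 * np.triu(G, k=1)            # pairwise couplings, i < j only
    h = -2.0 * (H.T @ y)                 # local fields
    const = float(y @ y + np.trace(G))   # uses x_i^2 = 1
    return J, h, const


def ising_cost(z, J, h):
    return float(z @ J @ z + h @ z)


def cost_vector(J, h, n):
    """Diagonal of the cost Hamiltonian; qubit i in |0> (|1>) encodes z_i = +1 (-1)."""
    k = np.arange(2 ** n)
    Z = 1 - 2 * ((k[:, None] >> np.arange(n)) & 1)   # shape (2^n, n), entries +-1
    c = np.einsum("ki,ij,kj->k", Z, J, Z) + Z @ h
    return c, Z


def qaoa1_expectation(gamma, beta, c, n):
    """<C> and output distribution of e^{-i beta B} e^{-i gamma C}|+>^n, B = sum_i X_i."""
    psi = np.exp(-1j * gamma * c) / np.sqrt(2 ** n)   # phase separator applied to |+>^n
    rx = np.array([[np.cos(beta), -1j * np.sin(beta)],
                   [-1j * np.sin(beta), np.cos(beta)]])   # e^{-i beta X}
    psi = psi.reshape((2,) * n)
    for axis in range(n):   # apply the same single-qubit mixer to every qubit
        psi = np.moveaxis(np.tensordot(rx, psi, axes=([1], [axis])), 0, axis)
    probs = np.abs(psi.reshape(-1)) ** 2
    return float(probs @ c), probs


# Hypothetical toy instance: real-valued 6x4 Gaussian channel, BPSK symbols, light noise.
rng = np.random.default_rng(0)
M, N = 6, 4
H = rng.normal(size=(M, N))
x_true = rng.choice([-1, 1], size=N)
y = H @ x_true + 0.1 * rng.normal(size=M)

J, h, const = ml_to_ising(H, y)
c, Z = cost_vector(J, h, N)

# Classical brute-force ML detection for reference (2^N candidates).
cands = np.array(list(itertools.product([-1, 1], repeat=N)))
x_ml = cands[np.argmin([np.sum((y - H @ x) ** 2) for x in cands])]

# Coarse grid search over the two 1-level angles; the small gamma range is an
# assumption motivated by the large cost values of this unnormalized instance.
gamma_grid = np.linspace(0.0, 0.5, 51)
beta_grid = np.linspace(0.0, np.pi, 61)
best = min((qaoa1_expectation(g, b, c, N)[0], g, b) for g in gamma_grid for b in beta_grid)
exp_best, probs = qaoa1_expectation(best[1], best[2], c, N)
k_ml = int(np.flatnonzero((Z == x_ml).all(axis=1))[0])
print("ML solution:", x_ml, " min cost:", c.min())
print(f"best <C> = {exp_best:.3f} at gamma={best[1]:.3f}, beta={best[2]:.3f}")
print(f"P(ML solution) = {probs[k_ml]:.3f}  (uniform guess: {1 / 2 ** N:.3f})")
```

The ground state of the Ising cost coincides with the brute-force ML decision, so any QAOA output distribution can be scored directly by the probability it assigns to that basis state. Exhaustive simulation scales as 2^N and is only meant for checking small instances.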
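On the circuit-depth side, one generic way to parallelize the QAOA phase-separation layer is to use the fact that all ZZ rotations commute, so rotations acting on disjoint qubit pairs can share one time step. The sketch below packs the couplings into layers with a greedy edge colouring of the coupling graph. It assumes each ZZ rotation compiles to a CNOT, RZ, CNOT sequence, and it is a standard illustration only, not the paper's CNOT gate elimination and parallelization algorithm, whose details are not given here; `zz_layers` and `cnot_cost` are hypothetical helpers.

```python
import numpy as np


def zz_layers(J, tol=1e-12):
    """Greedily pack commuting ZZ rotations (upper triangle of J) into layers on disjoint qubit pairs."""
    n = J.shape[0]
    edges = [(i, j) for i in range(n) for j in range(i + 1, n) if abs(J[i, j]) > tol]
    layers, busy = [], []   # busy[l] = set of qubits already touched in layer l
    for i, j in edges:
        for layer, used in zip(layers, busy):
            if i not in used and j not in used:   # first layer where both qubits are free
                layer.append((i, j))
                used.update((i, j))
                break
        else:                                      # no compatible layer: open a new one
            layers.append([(i, j)])
            busy.append({i, j})
    return edges, layers


def cnot_cost(edges, layers):
    """Assumes CNOT, RZ, CNOT per ZZ rotation: 2 CNOTs per coupling, CNOT depth 2 per layer."""
    return {"cnots": 2 * len(edges),
            "cnot_depth_serial": 2 * len(edges),
            "cnot_depth_parallel": 2 * len(layers)}


J_dense = np.triu(np.ones((4, 4)), k=1)   # 4 qubits, every pair coupled
J_chain = np.diag(np.ones(5), k=1)        # 6 qubits, nearest-neighbour couplings only
for name, J in [("dense", J_dense), ("chain", J_chain)]:
    edges, layers = zz_layers(J)
    print(name, layers, cnot_cost(edges, layers))
```

For the fully connected 4-qubit case the greedy packing places 6 couplings into 3 layers, and for the 6-qubit chain it places 5 couplings into 2 layers, so the CNOT depth of one phase-separation layer drops from twice the number of couplings to twice the number of layers. Greedy colouring is not always optimal; it never needs more than 2Δ - 1 layers for maximum qubit degree Δ, whereas Δ is a lower bound.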
