A dual-stream parallel architecture for robust visual tracking using scale-aware region proposals

IF 6.2

CAS Zone 2, Computer Science

Q1 COMPUTER SCIENCE, THEORY & METHODS

Future Generation Computer Systems-The International Journal of Escience

Pub Date : 2025-08-14

DOI:10.1016/j.future.2025.108079

Citations: 0

Abstract

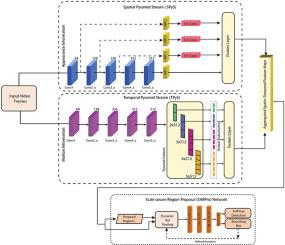

Visual tracking in dynamic environments faces significant challenges such as occlusions, scale variations, and abrupt motion changes, particularly in traffic scenarios. Accurate tracking requires handling multi-scale objects and maintaining temporal correlations across video sequences. To address these challenges, we present a scale-aware region proposal (SARPro) network that captures long-term dependencies in motion cues and uses a Faster R-CNN pipeline to predict high-quality region proposals for multi-scale video object detection and tracking (VODT). Features are extracted by a dual-stream feature pyramid network (DS-FPN) that captures spatial and temporal patterns. SARPro generates precise bounding box proposals that account for object scale variations, and an iterative LSTM-based step fine-tunes the bounding boxes. A low-confidence track filter (LCTFilter) is integrated into the DeepSORT tracking algorithm to filter out the least confident tracks. SARPro is designed to run under multi-threaded parallel computing with GPU acceleration (MTPC-GPU) so that detection and tracking proceed simultaneously. Experiments on benchmark datasets show that SARPro significantly enhances accuracy, robustly detecting and tracking small objects in complex video sequences while maintaining real-time performance. SARPro attains an mAP of 91.35% at 57.2 FPS on UA-DETRAC and 88.57% at 41.9 FPS on BDD100K.
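The abstract does not say how the LCTFilter scores or discards tracks. As a rough illustration of the general idea only, the sketch below assumes each track stores the confidences of its matched detections and is dropped once its mean confidence falls below a threshold. The `Track` class, `filter_low_confidence`, `threshold`, and `min_hits` are hypothetical names chosen for this example; they are not taken from the paper or from any DeepSORT implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from statistics import mean


@dataclass
class Track:
    """Hypothetical track record: an ID plus the confidences of its matched detections."""
    track_id: int
    confidences: list[float] = field(default_factory=list)


def filter_low_confidence(tracks: list[Track], threshold: float = 0.5,
                          min_hits: int = 3) -> list[Track]:
    """Drop tracks whose mean detection confidence is below `threshold`.

    Tracks with fewer than `min_hits` detections are kept, on the assumption
    that they are too young to judge. Both parameters are illustrative.
    """
    kept = []
    for track in tracks:
        if len(track.confidences) < min_hits:
            kept.append(track)  # not enough evidence yet, keep for now
        elif mean(track.confidences) >= threshold:
            kept.append(track)  # consistently confident track
    return kept


if __name__ == "__main__":
    tracks = [
        Track(1, [0.9, 0.8, 0.95]),  # confident track
        Track(2, [0.3, 0.2, 0.4]),   # low-confidence track
        Track(3, [0.1]),             # too young to judge
    ]
    print([t.track_id for t in filter_low_confidence(tracks)])
```

In a tracking loop, a filter of this kind would plausibly run after each association step, so that weak tracks are removed before they accumulate identity switches. Whether SARPro applies it at that point is not stated in the abstract.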

Source journal

CiteScore

19.90

Self-citation rate

2.70%

Articles published

376

Review time

10.6 months

About the journal:

Computing infrastructures and systems are constantly evolving, resulting in increasingly complex and collaborative scientific applications. To cope with these advancements, there is a growing need for collaborative tools that can effectively map, control, and execute these applications.

Furthermore, with the explosion of Big Data, there is a requirement for innovative methods and infrastructures to collect, analyze, and derive meaningful insights from the vast amount of data generated. This necessitates the integration of computational and storage capabilities, databases, sensors, and human collaboration.

Future Generation Computer Systems aims to pioneer advancements in distributed systems, collaborative environments, high-performance computing, and Big Data analytics. It strives to stay at the forefront of developments in grids, clouds, and the Internet of Things (IoT) to effectively address the challenges posed by these wide-area, fully distributed sensing and computing systems.
