Augmented robustness in home demand prediction: Integrating statistical loss function with enhanced cross-validation in machine learning hyperparameter optimisation

IF 9.6

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

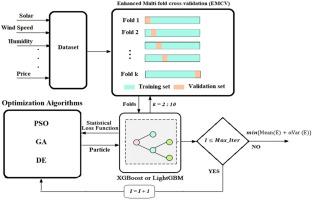

Sustainable forecasting of home energy demand (SFHED) is crucial for promoting energy efficiency, minimizing environmental impact, and optimizing resource allocation. Machine learning (ML) supports SFHED by identifying patterns and forecasting demand. However, conventional hyperparameter tuning methods often rely solely on minimizing average prediction errors, typically through fixed k-fold cross-validation, which overlooks error variability and limits model robustness. To address this limitation, we propose the Optimized Robust Hyperparameter Tuning for Machine Learning with Enhanced Multi-fold Cross-Validation (ORHT-ML-EMCV) framework. This method integrates statistical analysis of k-fold validation errors by incorporating their mean and variance into the optimization objective, enhancing robustness and generalizability. A weighting factor is introduced to balance accuracy and robustness, and its impact is evaluated across a range of values. A novel Enhanced Multi-Fold Cross-Validation (EMCV) technique is employed to automatically evaluate model performance across varying fold configurations without requiring a predefined k value, thereby reducing sensitivity to data splits. Using three evolutionary algorithms, namely Genetic Algorithm (GA), Particle Swarm Optimization (PSO), and Differential Evolution (DE), we optimize two ensemble models: XGBoost and LightGBM. The optimization process minimizes both mean error and variance, with robustness assessed through cumulative distribution function (CDF) analyses. Experiments on three real-world residential datasets show that the proposed method reduces worst-case Root Mean Square Error (RMSE) by up to 19.8% and narrows confidence intervals by up to 25%. Cross-household validations confirm strong generalization, achieving coefficients of determination (R²) of 0.946 and 0.972 on unseen homes. The framework offers a statistically grounded and efficient solution for robust energy forecasting.
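The mean-and-variance objective described in the abstract can be illustrated with a minimal sketch. The abstract does not give the exact formula, so everything here is an assumption made for illustration: the objective is taken to be mean(errors) + weight × variance(errors), the fold errors are pooled over a fixed set of k values as a stand-in for EMCV, a toy constant predictor replaces XGBoost/LightGBM, and a plain search over candidates replaces GA/PSO/DE. The names `kfold_indices`, `fold_rmses`, `robust_objective`, `shrink`, and `weight` are hypothetical.

```python
import math
import statistics


def kfold_indices(n_samples, k):
    """Split range(n_samples) into k contiguous folds (no shuffling)."""
    bounds = [i * n_samples // k for i in range(k + 1)]
    return [list(range(bounds[i], bounds[i + 1])) for i in range(k)]


def fold_rmses(y, k, shrink):
    """Per-fold RMSE of a toy model that predicts shrink * mean(training targets)."""
    errors = []
    for test_idx in kfold_indices(len(y), k):
        held_out = set(test_idx)
        train = [v for i, v in enumerate(y) if i not in held_out]
        pred = shrink * statistics.fmean(train)
        mse = statistics.fmean([(y[i] - pred) ** 2 for i in test_idx])
        errors.append(math.sqrt(mse))
    return errors


def robust_objective(y, shrink, weight, ks=(3, 5, 10)):
    """Assumed objective: mean + weight * variance of fold RMSEs pooled over ks."""
    pooled = [e for k in ks for e in fold_rmses(y, k, shrink)]
    return statistics.fmean(pooled) + weight * statistics.pvariance(pooled)


# Synthetic demand-like series (made up for the example, not from the paper).
demand = [10.0 + (i % 7) + 0.1 * i for i in range(30)]

# Simple candidate search; the paper uses evolutionary optimizers instead.
candidates = [0.8, 0.9, 1.0, 1.1, 1.2]
best = min(candidates, key=lambda s: robust_objective(demand, s, weight=0.5))
print("selected shrink:", best)
```

Setting `weight` to 0 recovers the conventional criterion that minimizes only the average validation error; larger values penalize hyperparameters whose errors vary strongly across folds.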

Source journal

Energy and AI

Engineering-Engineering (miscellaneous)

CiteScore

16.50

Self-citation rate

0.00%

Articles published

64

Review time

56 days
