GradCAM-PestDetNet: A deep learning-based hybrid model with explainable AI for pest detection and classification

IF 1.9

Q2 MULTIDISCIPLINARY SCIENCES

Citations: 0

Abstract

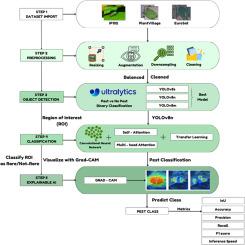

Pest detection is crucial for both agriculture and ecology. A growing global population needs efficient pest detection to safeguard food security, because pests threaten agricultural productivity, sustainability and economic development. They also damage machinery, equipment and soil, so effective detection has direct commercial value. Traditional pest detection methods are often slow, less accurate and reliant on expert knowledge. With advances in computer vision and AI, deep transfer learning models (DTLMs) have emerged as powerful alternatives. The GradCAM-PestDetNet methodology uses the object detection models YOLOv8m, YOLOv8s and YOLOv8n, alongside pretrained networks (VGG16, ResNet50, EfficientNetB0, MobileNetV2, InceptionV3 and DenseNet121) applied through transfer learning for feature extraction. Vision Transformers (ViT) and Swin Transformers were also explored for their ability to process complex data patterns. To improve interpretability, GradCAM-PestDetNet integrates Gradient-weighted Class Activation Mapping (Grad-CAM), which highlights the image regions that drive each prediction.
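The Grad-CAM step mentioned above can be sketched in a few lines. This is a minimal illustration of the standard Grad-CAM arithmetic, not the authors' code: each feature map of a chosen convolutional layer is weighted by the spatial mean of the class-score gradient for that map, the weighted maps are summed, and a ReLU keeps only positive evidence. The function name `grad_cam` and the toy 2x2 values are assumptions made for this example. In practice the activations and gradients would come from the trained network through framework hooks.

```python
def grad_cam(activations, gradients):
    """Compute a Grad-CAM heatmap from K feature maps and their gradients.

    activations, gradients: lists of K maps, each an H x W list of floats.
    gradients[k][i][j] is d(class score) / d(activations[k][i][j]).
    """
    num_channels = len(activations)
    height = len(activations[0])
    width = len(activations[0][0])

    # Channel weights: global-average-pool the gradients of the class score.
    weights = [
        sum(sum(row) for row in grad_map) / (height * width)
        for grad_map in gradients
    ]

    # Weighted sum of the feature maps, followed by ReLU.
    heatmap = [
        [
            max(0.0, sum(weights[k] * activations[k][i][j]
                         for k in range(num_channels)))
            for j in range(width)
        ]
        for i in range(height)
    ]

    # Scale to [0, 1] for display; an all-zero map is left unchanged.
    peak = max(max(row) for row in heatmap)
    if peak > 0:
        heatmap = [[value / peak for value in row] for row in heatmap]
    return heatmap


# Toy input (made-up values): two 2x2 feature maps and their gradients.
activations = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [1.0, 0.0]],
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],      # mean gradient 0.5: supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # mean gradient -1.0: opposes the class
]
heatmap = grad_cam(activations, gradients)
```

Here the second feature map has a negative weight, so the locations where only it fires are zeroed by the ReLU, and the heatmap peaks where the first map is strongest. Upsampling the heatmap to the input resolution and overlaying it on the image gives the usual Grad-CAM visualization.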

- Uses YOLOv8 models and transfer learning for pest detection. YOLOv8n gives the fastest inference at 1.86 ms per image, which keeps the system viable in low-resource environments.

- Employs an ensemble model (ResNet50, DenseNet, MobileNet) that achieved 67.07% accuracy, a 66.3% F1-score and 68.1% recall, compared with 21.5% accuracy for the baseline CNN. The result is a more generalized and robust model that is less biased towards the majority class.

- Integrates Grad-CAM for improved interpretability in pest detection.
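The abstract does not say how the three networks in the ensemble are combined or how the reported metrics are averaged. The sketch below assumes soft voting (averaging class probabilities across models) and uses macro-averaged recall to show why accuracy alone can hide a neglected minority class. All function names and probability values are hypothetical and chosen only for illustration.

```python
def soft_vote(prob_sets):
    """Average per-class probabilities across models; return predicted labels.

    prob_sets: one entry per model, each a list of per-sample probability lists.
    """
    num_models = len(prob_sets)
    predictions = []
    for sample_probs in zip(*prob_sets):  # outputs of every model for one sample
        num_classes = len(sample_probs[0])
        mean_probs = [
            sum(model_probs[c] for model_probs in sample_probs) / num_models
            for c in range(num_classes)
        ]
        predictions.append(max(range(num_classes), key=lambda c: mean_probs[c]))
    return predictions


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_recall(y_true, y_pred):
    """Unweighted mean of per-class recall, so rare classes count equally."""
    classes = sorted(set(y_true))
    recalls = []
    for c in classes:
        support = sum(1 for t in y_true if t == c)
        hits = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        recalls.append(hits / support)
    return sum(recalls) / len(classes)


# Hypothetical softmax outputs of three models on four images, three classes.
y_true = [0, 0, 1, 2]
model_a = [[0.7, 0.2, 0.1], [0.6, 0.3, 0.1], [0.5, 0.4, 0.1], [0.2, 0.2, 0.6]]
model_b = [[0.6, 0.3, 0.1], [0.4, 0.5, 0.1], [0.2, 0.7, 0.1], [0.3, 0.3, 0.4]]
model_c = [[0.8, 0.1, 0.1], [0.5, 0.3, 0.2], [0.3, 0.6, 0.1], [0.5, 0.2, 0.3]]

ensemble_pred = soft_vote([model_a, model_b, model_c])
single_pred = soft_vote([model_c])  # one model alone, for comparison

ensemble_acc = accuracy(y_true, ensemble_pred)
single_acc = accuracy(y_true, single_pred)
single_macro_recall = macro_recall(y_true, single_pred)
```

In this toy data each model misclassifies a different image, so averaging their probabilities corrects all three mistakes. The single model `model_c` reaches 75% accuracy but never predicts class 2, which macro recall exposes by dropping to two thirds.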



Source journal

MethodsX

Health Professions-Medical Laboratory Technology

CiteScore

3.60

Self-citation rate

5.30%

Articles published

314

Review time

7 weeks
