Empowering cardiovascular diagnostics with SET-MobileNet: A lightweight and accurate deep learning based classification approach

IF 4.2

Zone 3, Computer Science

Q2 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

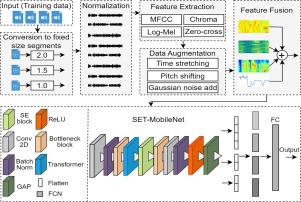

Cardiovascular diseases (CVDs) remain the leading cause of mortality worldwide, necessitating early detection and accurate diagnosis for improved patient outcomes. This study introduces SET-MobileNet, a lightweight deep learning model designed for automated heart sound classification, integrating transformers to capture long-range dependencies and squeeze-and-excitation (SE) blocks to emphasize relevant acoustic features while suppressing noise artifacts. Unlike traditional methods that rely on handcrafted features, SET-MobileNet employs a multimodal feature extraction approach, incorporating log-mel spectrograms, Mel-Frequency Cepstral Coefficients (MFCCs), chroma features, and zero-crossing rates to enhance classification robustness. The model is evaluated across multiple publicly available heart sound datasets, including CirCor, HSS, GitHub, and Heartbeat Sounds, achieving a state-of-the-art accuracy of 99.95% for 2.0-second heart sound segments in the CirCor dataset. Extensive experiments demonstrate that multimodal feature representations significantly improve classification performance by capturing both time-frequency and spectral characteristics of heart sounds. SET-MobileNet is computationally efficient, with a model size of 8.61 MB and single-sample inference times under 6.5 ms, making it suitable for real-time deployment on mobile and embedded devices. Ablation studies confirm the contributions of transformers and SE blocks, showing incremental improvements in accuracy and noise suppression.
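The abstract credits squeeze-and-excitation (SE) blocks with emphasizing relevant acoustic features while suppressing noise. As a rough illustration only, the sketch below implements the standard SE channel recalibration in plain Python: global average pooling, a ReLU bottleneck, sigmoid gates, and channel-wise scaling. The abstract does not state SET-MobileNet's reduction ratio, block placement, or learned weights. The [channel][time][frequency] layout, the names `squeeze`, `dense`, and `se_block`, and every weight value below are therefore hypothetical choices for a toy example, not the authors' implementation.

```python
import math
from typing import List, Tuple

# Feature map laid out as [channel][time][frequency] (hypothetical layout,
# e.g. activations computed from stacked time-frequency inputs).
FeatureMap = List[List[List[float]]]
Matrix = List[List[float]]  # weight matrix stored as [out_features][in_features]


def squeeze(x: FeatureMap) -> List[float]:
    """Global average pooling: collapse each channel to one scalar descriptor."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]


def dense(w: Matrix, b: List[float], v: List[float]) -> List[float]:
    """Fully connected layer computing w @ v + b."""
    return [sum(wi * vi for wi, vi in zip(row, v)) + bi for row, bi in zip(w, b)]


def se_block(x: FeatureMap, w1: Matrix, b1: List[float],
             w2: Matrix, b2: List[float]) -> Tuple[FeatureMap, List[float]]:
    """Recalibrate channels: squeeze -> ReLU bottleneck -> sigmoid gates -> scale."""
    z = squeeze(x)
    h = [max(0.0, t) for t in dense(w1, b1, z)]                 # C -> C/r, ReLU
    s = [1.0 / (1.0 + math.exp(-t)) for t in dense(w2, b2, h)]  # C/r -> C, gates in (0, 1)
    scaled = [[[g * val for val in row] for row in ch] for g, ch in zip(s, x)]
    return scaled, s


# Toy input: 2 channels of 2x2 values, reduction ratio r = 2 (hypothetical).
x = [
    [[1.0, 1.0], [1.0, 1.0]],  # channel 0: strong response
    [[0.0, 0.0], [0.0, 0.0]],  # channel 1: no response
]
# Hand-picked weights (hypothetical) so that gate 0 rises and gate 1 falls.
w1, b1 = [[1.0, 0.0]], [0.0]          # 2 -> 1
w2, b2 = [[2.0], [-2.0]], [0.0, 0.0]  # 1 -> 2
out, gates = se_block(x, w1, b1, w2, b2)
print([round(g, 4) for g in gates])  # [0.8808, 0.1192]
```

The gates lie strictly between 0 and 1, so an SE block can only attenuate each channel by a different amount. It never amplifies a channel in absolute terms. In a trained network the weights are learned, so training determines which channels are damped (for example, noise-dominated ones). They are not fixed by hand as in this toy case.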



Source journal

Image and Vision Computing

Engineering & Technology: Engineering, Electrical & Electronic

CiteScore

8.50

Self-citation rate

8.50%

Articles published

143

Review time

7.8 months

Journal description:

Image and Vision Computing has as a primary aim the provision of an effective medium of interchange for the results of high quality theoretical and applied research fundamental to all aspects of image interpretation and computer vision. The journal publishes work that proposes new image interpretation and computer vision methodology or addresses the application of such methods to real world scenes. It seeks to foster a deeper understanding of the discipline by encouraging the quantitative comparison and performance evaluation of the proposed methodology. The coverage includes: image interpretation, scene modelling, object recognition and tracking, shape analysis, monitoring and surveillance, active vision and robotic systems, SLAM, biologically-inspired computer vision, motion analysis, stereo vision, document image understanding, character and handwritten text recognition, face and gesture recognition, biometrics, vision-based human-computer interaction, human activity and behavior understanding, data fusion from multiple sensor inputs, image databases.
