ChatGPT-4o Compared With Human Researchers in Writing Plain-Language Summaries for Cochrane Reviews: A Blinded, Randomized Non-Inferiority Controlled Trial

Abstract

Introduction

Plain language summaries in Cochrane reviews are designed to present key information in a way that is understandable to individuals without a medical background. Despite Cochrane's author guidelines, these summaries often fail to achieve their intended purpose. Studies show that they are generally difficult to read and vary in their adherence to the guidelines. Artificial intelligence is increasingly used in medicine and academia, with its potential being tested in various roles. This study aimed to investigate whether ChatGPT-4o could produce plain language summaries that are as good as the already published plain language summaries in Cochrane reviews.

Methods

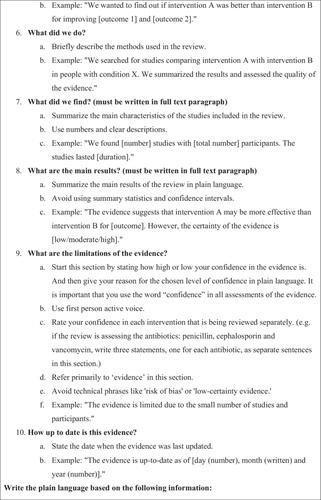

We conducted a randomized, single-blinded study of 36 plain language summaries: 18 human-written and 18 generated by ChatGPT-4o, with both versions covering the same 18 Cochrane reviews. The calculated sample size was 36 summaries, and each summary was evaluated four times: twice by members of a Cochrane editorial group and twice by laypersons. The summaries were assessed in three ways. First, all assessors rated each summary for informativeness, readability, and level of detail on a Likert scale from 1 to 10. They were also asked whether they would submit the summary and whether they could identify who had written it. Second, members of a Cochrane editorial group scored the summaries against a checklist based on Cochrane's guidelines for plain language summaries, with scores ranging from 0 to 10. Finally, the readability of the summaries was analyzed with objective measures, namely Lix and Flesch-Kincaid scores. Randomization and allocation to either ChatGPT-4o or human-written summaries were performed with random.org's random sequence generator, and assessors were blinded to the authorship of the summaries.
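For readers unfamiliar with the two readability measures named above, the following is a minimal sketch of how they are commonly computed, not the tooling used in this study. It assumes the standard Lix formula (average sentence length plus the percentage of words longer than six letters) and the Flesch-Kincaid grade-level formula. The function names, the regex-based tokenization, and the vowel-group syllable counter are illustrative assumptions; dedicated tools count syllables and sentences more carefully, so their scores may differ.

```python
import re


def _words(text):
    # Assumption: a word is a run of letters, optionally joined by ' or -.
    return re.findall(r"[A-Za-z]+(?:['-][A-Za-z]+)*", text)


def _sentence_count(text):
    # Assumption: each run of ., ! or ? ends one sentence (at least one sentence).
    return max(1, len(re.findall(r"[.!?]+", text)))


def _syllables(word):
    # Rough heuristic: one syllable per group of consecutive vowels.
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def lix(text):
    """Lix = words/sentences + 100 * (words longer than 6 characters)/words."""
    words = _words(text)
    if not words:
        raise ValueError("text contains no words")
    long_words = sum(1 for w in words if len(w) > 6)
    return len(words) / _sentence_count(text) + 100 * long_words / len(words)


def flesch_kincaid_grade(text):
    """Grade level = 0.39 * words/sentences + 11.8 * syllables/words - 15.59."""
    words = _words(text)
    if not words:
        raise ValueError("text contains no words")
    syllables = sum(_syllables(w) for w in words)
    return (0.39 * len(words) / _sentence_count(text)
            + 11.8 * syllables / len(words)
            - 15.59)
```

With both measures, higher values indicate text that is harder to read, so a summary written in short sentences with short words scores lower on each.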

Results

The plain language summaries generated by ChatGPT-4o scored 1 point higher on informativeness (p < .001) and level of detail (p = .004), and 2 points higher on readability (p = .002), than the human-written summaries. Lix and Flesch-Kincaid scores were high for both groups of summaries, though the ChatGPT-4o summaries were slightly easier to read (p < .001). Assessors found it difficult to distinguish between ChatGPT-4o and human-written summaries, with only 20% correctly identifying ChatGPT-generated text. ChatGPT-4o summaries were preferred for submission over the human-written summaries (64% vs. 36%, p < .001).

Conclusion

ChatGPT-4o shows promise in writing plain language summaries for Cochrane reviews at least as well as humans, and in some cases slightly better. This study suggests that ChatGPT-4o could become a tool for drafting easy-to-understand plain language summaries for Cochrane reviews with a quality approaching or matching that of human authors.

Clinical Trial Registration and Protocol

Available at https://osf.io/aq6r5.
