A large-scale replication of scenario-based experiments in psychology and management using large language models

IF 18.3

Q1 COMPUTER SCIENCE, INTERDISCIPLINARY APPLICATIONS

Citations: 0

Abstract

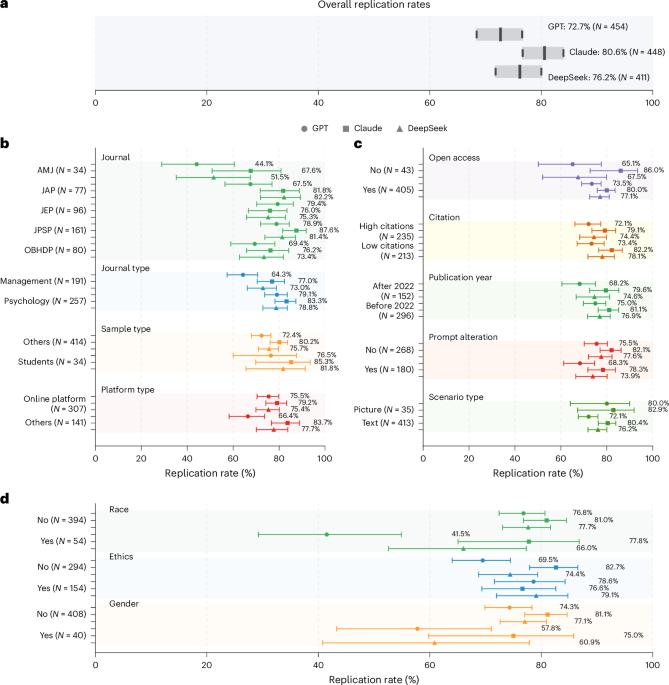

We conducted a large-scale study replicating 156 psychological experiments from top social science journals using three state-of-the-art large language models (LLMs). Our results reveal that, while LLMs demonstrated high replication rates for main effects (73–81%) and moderate to strong success with interaction effects (46–63%), they consistently produced larger effect sizes than human studies. Notably, LLMs showed significantly lower replication rates for studies involving socially sensitive topics such as race, gender and ethics. When original studies reported null findings, LLMs produced significant results at remarkably high rates (68–83%); while this could reflect cleaner data with less noise, it also suggests potential risks of effect size overestimation. Our results demonstrate both the promises and the challenges of LLMs in psychological research: while LLMs are efficient tools for pilot testing and rapid hypothesis validation, enriching rather than replacing traditional human-participant studies, they require more nuanced interpretation and human validation for complex social phenomena and culturally sensitive research questions.

Researchers replicated 156 psychological experiments using three large language models (LLMs) instead of human participants. LLMs achieved 73–81% replication rates but showed amplified effect sizes and challenges with socially sensitive topics.
