Predictive Coding Model Detects Novelty on Different Levels of Representation Hierarchy

IF 2.1

Zone 4, Computer Science

Q3 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

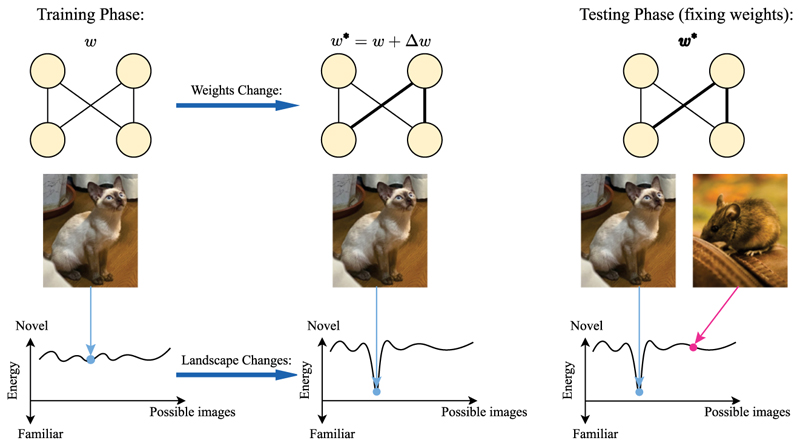

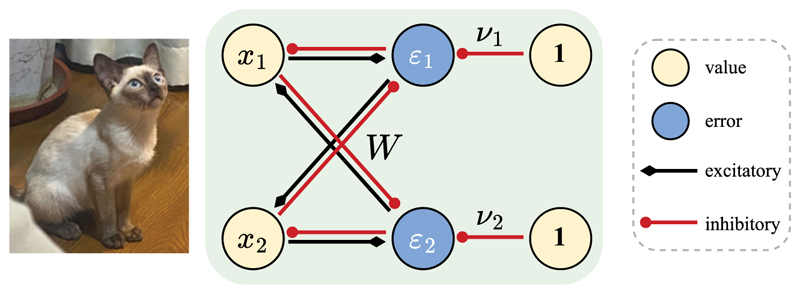

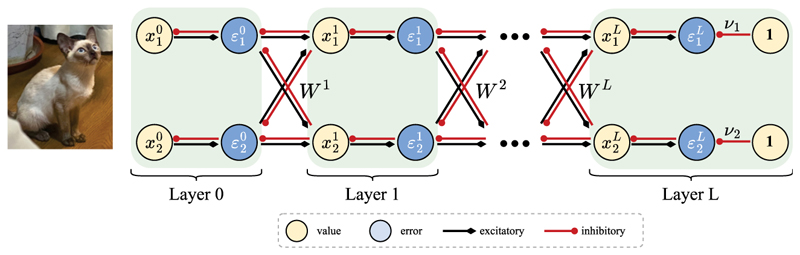

Novelty detection, also known as familiarity discrimination or recognition memory, refers to the ability to distinguish whether a stimulus has been seen before. It has been hypothesized that novelty detection can naturally arise within networks that store memory or learn efficient neural representation because these networks already store information on familiar stimuli. However, existing computational models supporting this idea have yet to reproduce the high capacity of human recognition memory, leaving the hypothesis in question. This article demonstrates that predictive coding, an established model previously shown to effectively support representation learning and memory, can also naturally discriminate novelty with high capacity. The predictive coding model includes neurons encoding prediction errors, and we show that these neurons produce higher activity for novel stimuli, so that the novelty can be decoded from their activity. Additionally, hierarchical predictive coding networks detect novelty at different levels of abstraction within the hierarchy, from low-level sensory features like arrangements of pixels to high-level semantic features like object identities. Overall, based on predictive coding, this article establishes a unified framework that brings together novelty detection, associative memory, and representation learning, demonstrating that a single model can capture these various cognitive functions.
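The core claim above, that prediction-error neurons respond more strongly to novel stimuli, can be illustrated with a toy example. The following is a minimal sketch and not the article's model: it assumes a single-layer linear network in which each unit is predicted from all other units, random binary patterns as stimuli, and the summed squared prediction error as the novelty signal. The function names (`train_pc_weights`, `novelty_score`) and all parameter values are hypothetical choices made for illustration.

```python
import numpy as np


def train_pc_weights(patterns, lr=0.05, steps=1000):
    """Learn weights that predict each unit from the other units.

    Assumption: a linear, single-layer network trained by gradient descent
    on the mean squared prediction error. This is an illustrative stand-in,
    not the hierarchical model described in the article.
    """
    n_patterns, n_units = patterns.shape
    W = np.zeros((n_units, n_units))
    for _ in range(steps):
        # Prediction error for every stored pattern (one row per pattern).
        errors = patterns - patterns @ W.T
        # Gradient step that reduces the squared prediction error.
        W += lr * errors.T @ patterns / n_patterns
        # A unit must not predict itself, so keep the diagonal at zero.
        np.fill_diagonal(W, 0.0)
    return W


def novelty_score(W, stimuli):
    """Summed squared activity of the error units for each stimulus."""
    errors = stimuli - stimuli @ W.T
    return np.sum(errors ** 2, axis=1)


rng = np.random.default_rng(0)
familiar = rng.choice([-1.0, 1.0], size=(20, 64))  # patterns seen in training
novel = rng.choice([-1.0, 1.0], size=(20, 64))     # patterns never seen

W = train_pc_weights(familiar)
familiar_scores = novelty_score(W, familiar)
novel_scores = novelty_score(W, novel)

print("mean error, familiar:", familiar_scores.mean())
print("mean error, novel:   ", novel_scores.mean())
```

In this toy setting the familiar patterns can be predicted almost perfectly, so their error energy is close to zero, while unseen patterns leave a large residual. A simple threshold on the score then separates the two groups, which is the sense in which novelty can be decoded from error-unit activity. The sketch says nothing about capacity or about novelty at higher levels of a hierarchy, both of which the article addresses.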

Source journal

Neural Computation

Engineering and Technology - Computer Science: Artificial Intelligence

CiteScore

6.30

Self-citation rate

3.40%

Articles published

83

Review time

3.0 months

About the journal:

Neural Computation is uniquely positioned at the crossroads between neuroscience and TMCS and welcomes the submission of original papers from all areas of TMCS, including: Advanced experimental design; Analysis of chemical sensor data; Connectomic reconstructions; Analysis of multielectrode and optical recordings; Genetic data for cell identity; Analysis of behavioral data; Multiscale models; Analysis of molecular mechanisms; Neuroinformatics; Analysis of brain imaging data; Neuromorphic engineering; Principles of neural coding, computation, circuit dynamics, and plasticity; Theories of brain function.