Exploiting long-term markovian feature importance via dual attention for partially-connected differential architecture search

IF 8

Zone 2: Computer Science

Q1 AUTOMATION & CONTROL SYSTEMS

Engineering Applications of Artificial Intelligence

Pub Date : 2025-06-17

DOI:10.1016/j.engappai.2025.111476

Citations: 0

Abstract

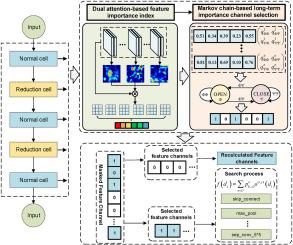

Differentiable architecture search (DARTS) is a gradient-based search method for neural architecture generation. However, DARTS suffers from unbalanced competition between unweighted and weighted operations during the search phase of the supernetwork, which causes the searched architecture to collapse. This paper proposes MA-DARTS, which exploits long-term Markovian feature importance via dual attention for partially-connected differential architecture search. It counters the excessive accumulation of unweighted-operation dominance by reducing redundant features in the supernetwork. First, spatial attention learns spatial location attention factors for different semantic groups; this grouped attention helps capture changes in the spatial semantic importance of search features. Second, channel feature importance is obtained by learning channel attention weights through a one-dimensional convolution, without dimensionality reduction. Finally, a Markov chain-based strategy selects feature channels by their long-term importance. This strategy dynamically transmits key features to the search space, which improves the utilization of effective feature information during search. Experimental results show that MA-DARTS suppresses the excessive proportion of unweighted operations during the search, achieving better network performance while keeping the architecture search stable. Compared with DARTS, the proposed method improves accuracy by 0.43%, 0.68%, and 2.2% on the CIFAR-10, CIFAR-100, and ImageNet datasets, respectively.
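The abstract does not give the exact formulation, so the following is only a minimal sketch of two of the ideas it names: channel attention computed with a one-dimensional convolution over per-channel descriptors (no dimensionality reduction), and channel selection driven by a long-term importance score. Everything specific here is an assumption made for illustration: the function names, the fixed uniform kernel that stands in for learned convolution weights, and the first-order recursive update (each new state depends only on the previous state and the current weights) used as a stand-in for the Markov chain-based strategy. It is not the authors' implementation.

```python
import math


def channel_attention(feature_map, kernel_size=3):
    """Channel weights from global average pooling, a 1D convolution, and a sigmoid.

    feature_map: list of channels, each an H x W list of lists of floats.
    """
    # Global average pooling: one scalar descriptor per channel.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
              for ch in feature_map]
    pad = kernel_size // 2
    padded = [0.0] * pad + pooled + [0.0] * pad
    # Assumption: a uniform kernel as a placeholder for learned weights.
    kernel = [1.0 / kernel_size] * kernel_size
    # The convolution slides across channels, so the output keeps one value
    # per channel (no bottleneck layer, hence no dimensionality reduction).
    conv = [sum(k * padded[i + j] for j, k in enumerate(kernel))
            for i in range(len(pooled))]
    return [1.0 / (1.0 + math.exp(-v)) for v in conv]


def update_long_term(prev, current, decay=0.9):
    """Hypothetical first-order update of long-term channel importance."""
    return [decay * p + (1.0 - decay) * c for p, c in zip(prev, current)]


def select_channels(importance, k):
    """Indices of the k highest-scoring channels, in ascending index order."""
    ranked = sorted(range(len(importance)),
                    key=lambda i: importance[i], reverse=True)
    return sorted(ranked[:k])


# Toy input: 4 channels of size 2 x 2, where channel c is filled with the value c.
features = [[[float(c)] * 2 for _ in range(2)] for c in range(4)]
weights = channel_attention(features)
long_term = update_long_term([0.5] * 4, weights)
selected = select_channels(long_term, k=2)
print(selected)  # [2, 3]
```

In a partially-connected search, only the selected channels would be passed through the candidate operations while the rest bypass them; how MA-DARTS performs that routing is not described in the abstract.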



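Source journal

Engineering Applications of Artificial Intelligence

Engineering and Technology - Engineering: Electrical and Electronic

CiteScore: 9.60

Self-citation rate: 10.00%

Articles published: 505

Review time: 68 days

About the journal: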

Artificial Intelligence (AI) is pivotal in driving the fourth industrial revolution, witnessing remarkable advancements across various machine learning methodologies. AI techniques have become indispensable tools for practicing engineers, enabling them to tackle previously insurmountable challenges. Engineering Applications of Artificial Intelligence serves as a global platform for the swift dissemination of research elucidating the practical application of AI methods across all engineering disciplines. Submitted papers are expected to present novel aspects of AI utilized in real-world engineering applications, validated using publicly available datasets to ensure the replicability of research outcomes. Join us in exploring the transformative potential of AI in engineering.
