Evaluating and mitigating bias in AI-based medical text generation

IF 18.3

Q1 COMPUTER SCIENCE, INTERDISCIPLINARY APPLICATIONS

Citations: 0

Abstract

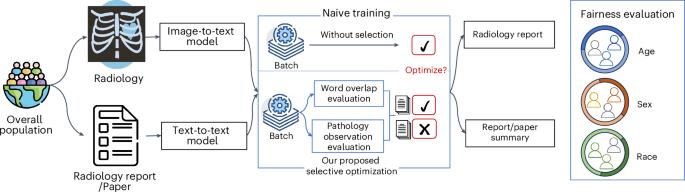

Artificial intelligence (AI) systems, particularly those based on deep learning models, have increasingly achieved expert-level performance in medical applications. However, there is growing concern that such AI systems may reflect and amplify human bias, reducing the quality of their performance in historically underserved populations. The fairness issue has attracted considerable research interest in medical image classification, yet it remains understudied in text generation. In this study, we investigate the fairness problem in medical text generation and observe substantial performance discrepancies across races, sexes and age groups, including intersectional groups. These discrepancies persist across model scales and evaluation metrics. To mitigate this fairness issue, we propose an algorithm that selectively optimizes for the underserved groups to reduce bias. Our evaluations across multiple backbones, datasets and modalities demonstrate that the proposed algorithm enhances fairness in text generation without compromising overall performance.

This study evaluates bias in AI-generated medical text, revealing disparities across race, sex and age. An optimization method is proposed to enhance fairness without compromising performance, offering a step toward more equitable AI in healthcare.
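The abstract does not specify how the underserved groups are selected or optimized, so the following is only an illustrative sketch of the general idea, not the authors' algorithm. It assumes per-sample losses and a demographic group label for each sample are available. The function names, the "group mean loss above the overall mean" selection rule, and the `boost` factor are all hypothetical choices made for illustration.

```python
from collections import defaultdict


def group_means(values, groups):
    """Return the mean of `values` for each group label."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for value, group in zip(values, groups):
        sums[group] += value
        counts[group] += 1
    return {group: sums[group] / counts[group] for group in sums}


def performance_gap(scores, groups):
    """Gap between the best and worst group mean score (one simple disparity measure)."""
    means = group_means(scores, groups)
    return max(means.values()) - min(means.values())


def selective_weights(losses, groups, boost=2.0):
    """Assumed rule: upweight samples from groups whose mean loss exceeds the overall mean."""
    overall = sum(losses) / len(losses)
    means = group_means(losses, groups)
    underserved = {group for group, mean in means.items() if mean > overall}
    return [boost if group in underserved else 1.0 for group in groups]


def weighted_loss(losses, weights):
    """Weighted average of the per-sample losses."""
    return sum(l * w for l, w in zip(losses, weights)) / sum(weights)


# Toy data: group "b" has higher loss than group "a".
losses = [1.0, 1.0, 3.0, 3.0]
groups = ["a", "a", "b", "b"]
weights = selective_weights(losses, groups)
objective = weighted_loss(losses, weights)
```

In a training loop, a weighted objective of this kind would stand in for the plain average loss, so that groups with higher loss contribute more to each update. Intersectional groups could be handled by using combined labels such as `("female", "65+")` as the group key.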
