Back to recurrent processing at the crossroad of transformers and state-space models

Impact factor: 23.9

Zone 1, Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

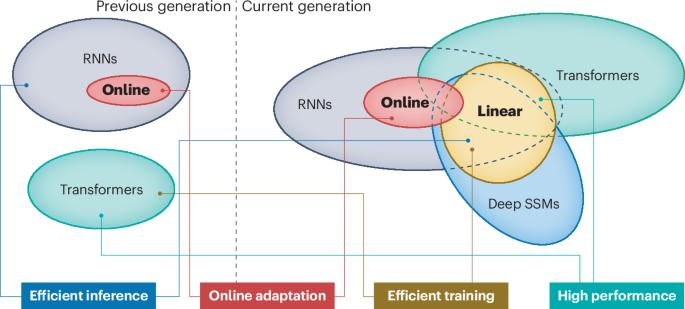

Developing models that can process and learn from long sequences of data is a longstanding challenge for the machine learning community. The exceptional results of transformer-based approaches, such as large language models, have promoted the idea that parallel attention is the key to meeting this challenge, temporarily obscuring the role of the classic sequential processing performed by recurrent models. However, in the past few years a new generation of neural models has emerged that combines transformers and recurrent networks, motivated by concerns over the quadratic complexity of self-attention in the sequence length. Meanwhile, (deep) state-space models have also emerged as robust approaches to function approximation over time, opening a new perspective on learning from sequential data. Here we provide an overview of these trends, unified under the umbrella of recurrent models, and discuss their likely crucial impact on the development of future architectures for large generative models.

While transformers and large language models excel at efficiently processing long sequences, new approaches have been proposed that incorporate recurrence to overcome the quadratic cost of self-attention. Tiezzi et al. discuss recurrent and state-space models and the promise they hold for future sequence-processing networks.
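
The contrast drawn in the abstract, between attention whose cost grows quadratically with sequence length and recurrences that carry a fixed-size state, can be made concrete with a small sketch. This code is not taken from the paper. It assumes two textbook simplifications: causal linear attention with the feature map phi(x) = elu(x) + 1 in place of softmax, and a single-input, single-output linear state-space model with a diagonal transition. All function names are hypothetical. Each model is computed twice, once in a pairwise or unrolled form and once as a step-by-step recurrence.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def phi(x: Vector) -> Vector:
    # Assumed positive feature map elu(x) + 1; keeps every attention weight > 0.
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]


def linear_attention_parallel(q: List[Vector], k: List[Vector], v: List[Vector]) -> List[Vector]:
    """Causal linear attention computed pairwise: O(T^2) work for T steps."""
    out = []
    for t in range(len(q)):
        fq = phi(q[t])
        w = [dot(fq, phi(k[s])) for s in range(t + 1)]  # weight of every past step
        norm = sum(w)
        out.append([sum(w[s] * v[s][j] for s in range(t + 1)) / norm
                    for j in range(len(v[0]))])
    return out


def linear_attention_recurrent(q: List[Vector], k: List[Vector], v: List[Vector]) -> List[Vector]:
    """Same outputs, RNN-style: a fixed-size state (S, z) is updated once per step, O(T) work."""
    d_k, d_v = len(k[0]), len(v[0])
    S = [[0.0] * d_v for _ in range(d_k)]  # running sum of phi(k_s) v_s^T
    z = [0.0] * d_k                         # running sum of phi(k_s)
    out = []
    for q_t, k_t, v_t in zip(q, k, v):
        fk = phi(k_t)
        for i in range(d_k):
            z[i] += fk[i]
            for j in range(d_v):
                S[i][j] += fk[i] * v_t[j]
        fq = phi(q_t)
        norm = dot(fq, z)
        out.append([sum(fq[i] * S[i][j] for i in range(d_k)) / norm for j in range(d_v)])
    return out


def ssm_recurrent(a: Vector, b: Vector, c: Vector, u: Vector) -> Vector:
    """Diagonal linear SSM: x_k = a * x_{k-1} + b * u_k (elementwise), y_k = c . x_k."""
    x = [0.0] * len(a)
    ys = []
    for u_k in u:
        x = [a_i * x_i + b_i * u_k for a_i, x_i, b_i in zip(a, x, b)]
        ys.append(dot(c, x))
    return ys


def ssm_convolutional(a: Vector, b: Vector, c: Vector, u: Vector) -> Vector:
    """Same map unrolled: y_k = sum_j K_j u_{k-j}, with kernel K_j = sum_i c_i a_i^j b_i."""
    n = len(u)
    kernel = [sum(c_i * a_i ** j * b_i for a_i, b_i, c_i in zip(a, b, c)) for j in range(n)]
    return [sum(kernel[j] * u[step - j] for j in range(step + 1)) for step in range(n)]


# Toy inputs (arbitrary values chosen for illustration).
queries = [[0.1, -0.2], [0.3, 0.5], [-1.0, 0.2]]
keys = [[0.4, 0.1], [-0.3, 0.2], [0.0, 0.7]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
att_par = linear_attention_parallel(queries, keys, values)
att_rec = linear_attention_recurrent(queries, keys, values)

a, b, c = [0.9, 0.5], [1.0, -0.5], [0.3, 1.2]
u = [1.0, 0.0, -2.0, 0.5]
ssm_rec = ssm_recurrent(a, b, c, u)
ssm_conv = ssm_convolutional(a, b, c, u)

print(max(abs(x - y) for r1, r2 in zip(att_par, att_rec) for x, y in zip(r1, r2)))
print(max(abs(x - y) for x, y in zip(ssm_rec, ssm_conv)))
```

Both pairs should agree up to floating-point rounding. In this toy form, the recurrent versions keep a state whose size does not depend on the sequence length, which is the property that motivates reintroducing recurrence. The pairwise and convolutional versions show why the same models can still be trained in parallel over time.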

Source journal: Nature Machine Intelligence

CiteScore: 36.90

Self-citation rate: 2.10%

Articles published: 127

Journal description:

Nature Machine Intelligence is a distinguished publication that presents original research and reviews on various topics in machine learning, robotics, and AI. Our focus extends beyond these fields, exploring their profound impact on other scientific disciplines, as well as societal and industrial aspects. We recognize limitless possibilities for machine intelligence to augment human capabilities and knowledge in domains such as scientific exploration, healthcare, medical diagnostics, and the creation of safe and sustainable cities, transportation, and agriculture. At the same time, we acknowledge the ethical, social, and legal concerns that arise from the rapid pace of these advances.

To foster interdisciplinary discussions on these far-reaching implications, Nature Machine Intelligence serves as a platform for dialogue facilitated through Comments, News Features, News & Views articles, and Correspondence. Our goal is to encourage a comprehensive examination of these subjects.

Like all Nature-branded journals, Nature Machine Intelligence is run by a team of skilled editors. We adhere to a fair and rigorous peer-review process, ensuring high standards of copy-editing and production, swift publication, and editorial independence.
