LatentPINNs: Generative physics-informed neural networks via a latent representation learning

Journal impact factor (IF): 4.2

Citations: 0

Abstract

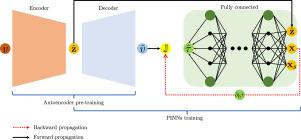

Physics-informed neural networks (PINNs) show promise as replacements for conventional mesh-based partial differential equation (PDE) solvers because they offer more accurate and flexible PDE solutions. However, PINNs are hampered by relatively slow convergence and by the need for additional, potentially expensive training whenever the PDE parameters change. To address these limitations, we introduce LatentPINN, a framework that feeds latent representations of the PDE parameters into PINNs as inputs in addition to the coordinates, which allows training over the distribution of these parameters. Motivated by recent progress in generative models, we propose using latent diffusion models to learn compressed latent representations of the distribution of PDE parameters; these representations then serve as input parameters for the neural-network (NN) functional solutions. We use a two-stage training scheme. In the first stage, we learn the latent representations for the distribution of PDE parameters. In the second stage, we train a physics-informed neural network on inputs formed from samples drawn randomly from the coordinate space within the solution domain, paired with samples from the learned latent representation of the PDE parameters. Given their importance in capturing evolving interfaces and fronts in various fields, we test the approach on a class of level set equations, exemplified by the nonlinear Eikonal equation. We present results for three sets of Eikonal parameters (velocity models). The proposed method performs well on new phase velocity models without any additional training.
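The two-stage scheme described above can be illustrated with a small NumPy sketch. It is a hedged illustration under several assumptions, not the authors' implementation. The velocity models are a hypothetical linear-in-depth family. PCA (via `np.linalg.svd`) stands in for the latent diffusion model in stage one. The network is a one-hidden-layer tanh MLP. Only the stage-two Eikonal residual loss, built from |∇T| = 1/v in squared form, is evaluated rather than minimized. All function names (`make_velocity_models`, `fit_latent`, `init_mlp`, `traveltime_and_grad`, `eikonal_residual_loss`) are hypothetical.

```python
import numpy as np


def make_velocity_models(n_models, nx, nz, rng):
    """Hypothetical family of 2D models v(x, z) = v0 + k*z in km/s, shape (n, nx, nz)."""
    depth = np.linspace(0.0, 1.0, nz)
    v0 = rng.uniform(1.5, 2.5, size=(n_models, 1, 1))
    k = rng.uniform(0.5, 1.5, size=(n_models, 1, 1))
    return v0 + k * depth[None, None, :] * np.ones((1, nx, 1))


def fit_latent(models, dim):
    """Stage 1 stand-in: PCA codes instead of the paper's latent diffusion model."""
    flat = models.reshape(len(models), -1)
    mean = flat.mean(axis=0)
    _, _, vt = np.linalg.svd(flat - mean, full_matrices=False)
    basis = vt[:dim]
    return (flat - mean) @ basis.T  # latent codes, shape (n_models, dim)


def init_mlp(in_dim, hidden, rng):
    """One-hidden-layer tanh network T(u) = w2 . tanh(W1 u + b1) + b2."""
    return {
        "W1": rng.normal(0.0, 1.0 / np.sqrt(in_dim), size=(hidden, in_dim)),
        "b1": np.zeros(hidden),
        "w2": rng.normal(0.0, 1.0 / np.sqrt(hidden), size=hidden),
        "b2": 0.0,
    }


def traveltime_and_grad(params, u):
    """Return T(u) and its analytic gradient w.r.t. the two spatial inputs (x, z)."""
    h = np.tanh(u @ params["W1"].T + params["b1"])       # (batch, hidden)
    T = h @ params["w2"] + params["b2"]                   # (batch,)
    dT_du = (params["w2"] * (1.0 - h**2)) @ params["W1"]  # (batch, in_dim)
    return T, dT_du[:, :2]


def eikonal_residual_loss(params, models, codes, coords, batch, rng):
    """Stage 2 batch: random grid points paired with randomly chosen latent codes."""
    m = rng.integers(0, len(models), size=batch)
    i = rng.integers(0, models.shape[1], size=batch)
    j = rng.integers(0, models.shape[2], size=batch)
    xz = np.stack([coords[0][i], coords[1][j]], axis=1)   # (batch, 2)
    u = np.concatenate([xz, codes[m]], axis=1)            # coordinates + latent code
    _, grad_T = traveltime_and_grad(params, u)
    v = models[m, i, j]
    # Eikonal equation |grad T| = 1/v, written as |grad T|^2 - 1/v^2 = 0.
    residual = np.sum(grad_T**2, axis=1) - 1.0 / v**2
    return np.mean(residual**2)


rng = np.random.default_rng(0)
nx, nz, latent_dim = 32, 32, 2  # this toy family has two degrees of freedom
models = make_velocity_models(20, nx, nz, rng)
codes = fit_latent(models, latent_dim)
coords = (np.linspace(0.0, 1.0, nx), np.linspace(0.0, 1.0, nz))
params = init_mlp(2 + latent_dim, 64, rng)
loss = eikonal_residual_loss(params, models, codes, coords, batch=256, rng=rng)
print(f"Eikonal residual loss at initialization: {loss:.4f}")
```

Each batch pairs randomly sampled grid coordinates with randomly chosen latent codes. This pairing is what lets a single network be trained over the whole parameter distribution. A practical version would also enforce a point-source condition such as T(x_s) = 0 and would optimize the network weights with an automatic-differentiation framework. Both are omitted here to keep the sketch short.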
