Bias recognition and mitigation strategies in artificial intelligence healthcare applications

IF 12.4

1区 医学

Q1 HEALTH CARE SCIENCES & SERVICES

引用次数: 0

Abstract

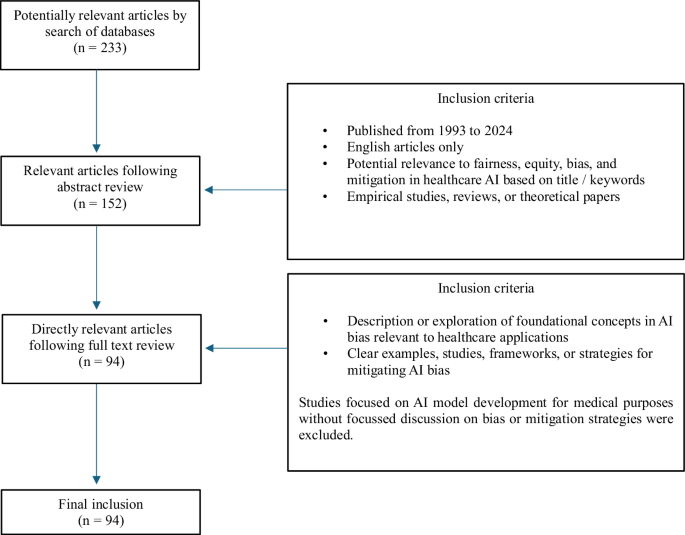

Artificial intelligence (AI) is delivering value across all aspects of clinical practice. However, bias may exacerbate healthcare disparities. This review examines the origins of bias in healthcare AI, strategies for mitigation, and responsibilities of relevant stakeholders towards achieving fair and equitable use. We highlight the importance of systematically identifying bias and engaging relevant mitigation activities throughout the AI model lifecycle, from model conception through to deployment and longitudinal surveillance.

人工智能(AI)正在为临床实践的各个方面带来价值。然而,偏见可能会加剧医疗差距。本综述探讨了医疗人工智能中偏见的起源、减轻偏见的策略以及相关利益方在实现公平公正使用方面的责任。我们强调了在整个人工智能模型生命周期(从模型构思到部署和纵向监控)中系统识别偏差并参与相关缓解活动的重要性。

本文章由计算机程序翻译,如有差异,请以英文原文为准。

求助全文

约1分钟内获得全文

求助全文

来源期刊

NPJ Digital Medicine

Multiple-

CiteScore

25.10

自引率

3.30%

发文量

170

审稿时长

15 weeks

期刊介绍:

npj Digital Medicine is an online open-access journal that focuses on publishing peer-reviewed research in the field of digital medicine. The journal covers various aspects of digital medicine, including the application and implementation of digital and mobile technologies in clinical settings, virtual healthcare, and the use of artificial intelligence and informatics.

The primary goal of the journal is to support innovation and the advancement of healthcare through the integration of new digital and mobile technologies. When determining if a manuscript is suitable for publication, the journal considers four important criteria: novelty, clinical relevance, scientific rigor, and digital innovation.

求助内容:

求助内容: 应助结果提醒方式:

应助结果提醒方式: