Teaching robots to build simulations of themselves

Impact factor (IF): 18.8

Zone 1: Computer Science

Q1: Computer Science, Artificial Intelligence

Citations: 0

Abstract

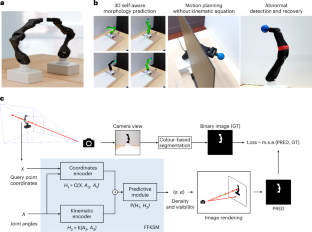

The emergence of vision catalysed a pivotal evolutionary advancement, enabling organisms not only to perceive but also to interact intelligently with their environment. This transformation is mirrored by the evolution of robotic systems, where the ability to leverage vision to simulate and predict their own dynamics marks a leap towards autonomy and self-awareness. Humans utilize vision to record experiences and internally simulate potential actions. For example, we can imagine that, if we stand up and raise our arms, the body will form a ‘T’ shape without physical movement. Similarly, simulation allows robots to plan and predict the outcomes of potential actions without execution. Here we introduce a self-supervised learning framework to enable robots to model and predict their morphology, kinematics and motor control using only brief raw video data, eliminating the need for extensive real-world data collection and kinematic priors. By observing their own movements, akin to humans watching their reflection in a mirror, robots learn an ability to simulate themselves and predict their spatial motion for various tasks. Our results demonstrate that this self-learned simulation not only enables accurate motion planning but also allows the robot to detect abnormalities and recover from damage.

Motion planning for a robot generally requires full knowledge of its structure. Here Hu and colleagues present a method for inferring the structure of a robot from visual information.



Source journal

Nature Machine Intelligence

Multiple-

CiteScore: 36.90

Self-citation rate: 2.10%

Articles published: 127

Journal description:

Nature Machine Intelligence is a distinguished publication that presents original research and reviews on various topics in machine learning, robotics, and AI. Our focus extends beyond these fields, exploring their profound impact on other scientific disciplines, as well as societal and industrial aspects. We recognize limitless possibilities wherein machine intelligence can augment human capabilities and knowledge in domains like scientific exploration, healthcare, medical diagnostics, and the creation of safe and sustainable cities, transportation, and agriculture. Simultaneously, we acknowledge the emergence of ethical, social, and legal concerns due to the rapid pace of advancements.

To foster interdisciplinary discussions on these far-reaching implications, Nature Machine Intelligence serves as a platform for dialogue facilitated through Comments, News Features, News & Views articles, and Correspondence. Our goal is to encourage a comprehensive examination of these subjects.

Similar to all Nature-branded journals, Nature Machine Intelligence operates under the guidance of a team of skilled editors. We adhere to a fair and rigorous peer-review process, ensuring high standards of copy-editing and production, swift publication, and editorial independence.
