Fusion-Decomposition Pan-Sharpening Network With Interactive Learning of Representation Graph

IF 4.7

Zone 2, Earth Sciences

Q1 ENGINEERING, ELECTRICAL & ELECTRONIC

IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing

Pub Date: 2024-12-31

DOI: 10.1109/JSTARS.2024.3524386

Citations: 0

Abstract

Deep learning (DL)-based pan-sharpening methods have become mainstream due to their exceptional performance. However, because ground truth is unavailable for supervised learning, most DL-based methods train on pseudo-ground-truth multispectral images, which limits their learning potential and constrains the model's solution space. Unsupervised methods, in turn, often neglect mutual learning across modalities, leaving pan-sharpened images with insufficient spatial detail. To address these issues, this study proposes a fusion-decomposition pan-sharpening model based on interactive learning of representation graphs. The model considers both the compression process from the source images to the fused result and the decomposition process from the fused result back to the source images. It aims to leverage the feature consistency between these two processes to enhance, in a data-driven manner, the spatial and spectral consistency learned by the fusion network. Specifically, the fusion network incorporates a meticulously designed representational graph interaction module and a graph interaction fusion module. These modules construct a representational graph structure for cross-modal feature communication and generate a global representation that guides the cross-modal semantic aggregation of multispectral and panchromatic data. In the decomposition network, a spatial structure perception module and a spectral feature extraction module, designed around the attributes of the source-image features, enable the network to better perceive and reconstruct the multispectral and panchromatic data from the fused result. This, in turn, strengthens the fusion network's perception of spectral information and spatial structure. Qualitative and quantitative results on the IKONOS, GaoFen-2, WorldView-2, and WorldView-3 datasets validate the effectiveness of the proposed method compared with other state-of-the-art methods.
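The fusion-decomposition idea can be illustrated as a self-supervised consistency loss: fuse the multispectral (MS) and panchromatic (PAN) inputs, decompose the fused result back into an MS estimate and a PAN estimate, and penalize the reconstruction error against the original sources. The following Python/NumPy code is a minimal sketch under stated assumptions, not the authors' implementation. All function names (`toy_fuse`, `toy_decompose`, `fusion_decomposition_loss`, `graph_interaction`, and the resampling helpers) are hypothetical. Fixed operators (nearest-neighbour upsampling with detail injection, average pooling, and a band mean) stand in for the learned fusion and decomposition networks. `graph_interaction` is a generic single round of similarity-weighted message passing over MS and PAN feature nodes, standing in for, and not reproducing, the paper's representational graph interaction module.

```python
import numpy as np


def upsample(ms: np.ndarray, r: int) -> np.ndarray:
    """Nearest-neighbour upsampling of an (h, w, B) MS cube by an integer factor r."""
    return np.repeat(np.repeat(ms, r, axis=0), r, axis=1)


def downsample(x: np.ndarray, r: int) -> np.ndarray:
    """Average-pool an (H, W, ...) array by factor r; H and W must be divisible by r."""
    h, w = x.shape[0] // r, x.shape[1] // r
    return x.reshape(h, r, w, r, *x.shape[2:]).mean(axis=(1, 3))


def toy_fuse(ms: np.ndarray, pan: np.ndarray, r: int) -> np.ndarray:
    """Hypothetical stand-in for the fusion network (simple detail injection)."""
    ms_up = upsample(ms, r)                  # (H, W, B)
    intensity = ms_up.mean(axis=2)           # crude intensity component, (H, W)
    detail = pan - intensity                 # spatial detail absent from the MS cube
    return ms_up + detail[..., None]         # inject the same detail into every band


def toy_decompose(fused: np.ndarray, r: int):
    """Hypothetical stand-in for the decomposition network."""
    ms_hat = downsample(fused, r)            # spatial degradation -> MS estimate
    pan_hat = fused.mean(axis=2)             # spectral degradation -> PAN estimate
    return ms_hat, pan_hat


def fusion_decomposition_loss(ms: np.ndarray, pan: np.ndarray, r: int) -> float:
    """Fuse, decompose back to the sources, and sum the two mean squared errors."""
    fused = toy_fuse(ms, pan, r)
    ms_hat, pan_hat = toy_decompose(fused, r)
    return float(np.mean((ms_hat - ms) ** 2) + np.mean((pan_hat - pan) ** 2))


def graph_interaction(ms_feat: np.ndarray, pan_feat: np.ndarray):
    """Hypothetical cross-modal graph step over (N, C) MS and (M, C) PAN node features.

    One round of softmax-normalised, similarity-weighted message passing,
    followed by mean pooling into a single global representation.
    """
    nodes = np.concatenate([ms_feat, pan_feat], axis=0)      # (N + M, C)
    scores = nodes @ nodes.T / np.sqrt(nodes.shape[1])       # pairwise affinities
    scores = scores - scores.max(axis=1, keepdims=True)      # numerical stability
    adj = np.exp(scores)
    adj = adj / adj.sum(axis=1, keepdims=True)               # row-normalised adjacency
    updated = adj @ nodes                                    # aggregate neighbour messages
    global_rep = updated.mean(axis=0)                        # graph-level summary, (C,)
    return updated, global_rep


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ms = rng.random((4, 4, 3))    # low-resolution MS: 4x4 pixels, 3 bands
    pan = rng.random((8, 8))      # high-resolution PAN at resolution ratio r = 2
    print(fusion_decomposition_loss(ms, pan, r=2))
```

Because `toy_decompose` is fixed here, the loss only measures how consistent the toy fusion is with its inputs. In the proposed model both branches are learned, and the abstract states that better reconstruction by the decomposition network in turn strengthens the fusion network's perception of spectral and spatial information.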


Source Journal

CiteScore: 9.30

Self-citation rate: 10.90%

Articles published: 563

Review time: 4.7 months

Journal Description:

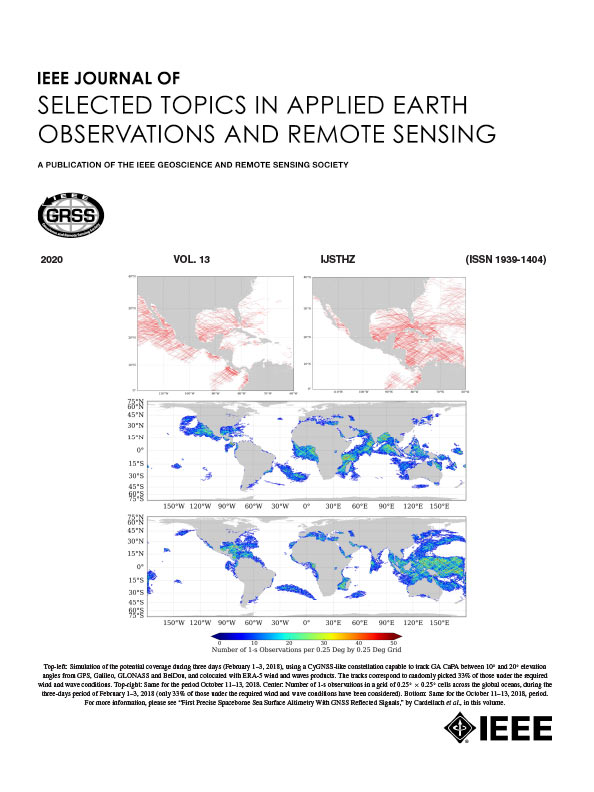

The IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing addresses the growing field of applications in Earth observations and remote sensing, and also provides a venue for the rapidly expanding special issues sponsored by the IEEE Geoscience and Remote Sensing Society. The journal draws upon the experience of the highly successful “IEEE Transactions on Geoscience and Remote Sensing” and provides a complementary medium for the wide range of topics in applied Earth observations. The ‘Applications’ areas encompass the societal benefit areas of the Global Earth Observation System of Systems (GEOSS) program. Through deliberations over two years, ministers from 50 countries agreed to identify nine areas where Earth observation could positively impact the quality of life and health of their respective countries. Some of these areas are not traditionally addressed in the IEEE context; they include biodiversity, health, and climate. Yet it is the skill sets of IEEE members, in areas such as observations, communications, computers, signal processing, standards, and ocean engineering, that form the technical underpinnings of GEOSS. Thus, the journal attracts a broad range of interests, serving present members in new ways while expanding IEEE's visibility into new areas.
