Sequential memory improves sample and memory efficiency in episodic control

IF 18.8

Zone 1: Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

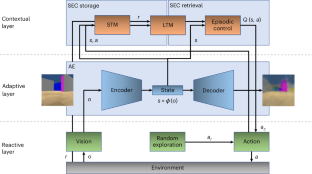

Deep reinforcement learning algorithms are known for their sample inefficiency, requiring extensive episodes to reach optimal performance. Episodic reinforcement learning algorithms aim to overcome this issue by using extended memory systems to leverage past experiences. However, these memory augmentations are often used as mere buffers, from which isolated events are resampled for offline learning (for example, replay). In this Article, we introduce Sequential Episodic Control (SEC), a hippocampal-inspired model that stores entire event sequences in their temporal order and employs a sequential bias in their retrieval to guide actions. We evaluate SEC across various benchmarks from the Animal-AI testbed, demonstrating its superior performance and sample efficiency compared to several state-of-the-art models, including Model-Free Episodic Control, Deep Q-Network and Episodic Reinforcement Learning with Associative Memory. Our experiments show that SEC achieves higher rewards and faster policy convergence in tasks requiring memory and decision-making. Additionally, we investigate the effects of memory constraints and forgetting mechanisms, revealing that prioritized forgetting enhances both performance and policy stability. Further, ablation studies demonstrate the critical role of the sequential memory component in SEC. Finally, we discuss how fast, sequential hippocampal-like episodic memory systems could support both habit formation and deliberation in artificial and biological systems.

Previous studies have explored the integration of episodic memory into reinforcement learning and control. Inspired by hippocampal memory, Freire et al. develop a model that improves learning speed and stability by storing experiences as sequences, demonstrating resilience and efficiency under memory constraints.
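To make the mechanism described in the abstract more concrete, the following is a minimal toy sketch of an episodic memory that keeps whole episodes in temporal order, favours the stored step that directly follows the previously retrieved one (a sequential bias), and drops the lowest-return episode when full (one possible form of prioritized forgetting). It is not the authors' implementation. The names `Episode`, `SequentialMemory`, `bias_gain` and `capacity`, the use of cosine similarity, the weighting of matches by episode return, and the specific forgetting rule are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Episode:
    states: list   # state vectors (lists of floats), in temporal order
    actions: list  # action taken at each step, aligned with `states`
    ret: float     # total reward collected in the episode (assumed positive)


def similarity(a, b):
    """Cosine similarity between two state vectors (an assumed metric)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


class SequentialMemory:
    """Hypothetical toy memory; not the SEC model from the paper."""

    def __init__(self, capacity=3, bias_gain=2.0):
        self.capacity = capacity    # maximum number of stored episodes
        self.bias_gain = bias_gain  # boost for the step following the last match
        self.episodes = []
        self.last_match = None      # (episode index, step index) of last retrieval

    def store(self, episode):
        self.episodes.append(episode)
        self.last_match = None  # stored indices may shift, so reset the bias
        if len(self.episodes) > self.capacity:
            # Assumed prioritized forgetting: discard the lowest-return episode.
            worst = min(range(len(self.episodes)),
                        key=lambda i: self.episodes[i].ret)
            del self.episodes[worst]

    def act(self, state):
        """Return the action of the best-matching stored step, or None."""
        best, best_score = None, 0.0  # only strictly positive scores qualify
        for e, ep in enumerate(self.episodes):
            for t, stored in enumerate(ep.states):
                score = similarity(state, stored) * ep.ret
                # Sequential bias: prefer continuing the sequence we are in.
                if self.last_match == (e, t - 1):
                    score *= self.bias_gain
                if score > best_score:
                    best, best_score = (e, t), score
        self.last_match = best
        if best is None:
            return None
        e, t = best
        return self.episodes[e].actions[t]
```

In this sketch, a state that appears in two stored episodes is resolved in favour of the episode whose preceding step was just retrieved, even if the other episode has a somewhat higher return. That is the kind of disambiguation an isolated-event buffer cannot provide.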

Source journal

Nature Machine Intelligence

Multiple-

CiteScore

36.90

Self-citation rate

2.10%

Articles published

127

About the journal:

Nature Machine Intelligence is a distinguished publication that presents original research and reviews on various topics in machine learning, robotics, and AI. Our focus extends beyond these fields, exploring their profound impact on other scientific disciplines, as well as societal and industrial aspects. We recognize limitless possibilities wherein machine intelligence can augment human capabilities and knowledge in domains like scientific exploration, healthcare, medical diagnostics, and the creation of safe and sustainable cities, transportation, and agriculture. Simultaneously, we acknowledge the emergence of ethical, social, and legal concerns due to the rapid pace of advancements.

To foster interdisciplinary discussions on these far-reaching implications, Nature Machine Intelligence serves as a platform for dialogue facilitated through Comments, News Features, News & Views articles, and Correspondence. Our goal is to encourage a comprehensive examination of these subjects.

Similar to all Nature-branded journals, Nature Machine Intelligence operates under the guidance of a team of skilled editors. We adhere to a fair and rigorous peer-review process, ensuring high standards of copy-editing and production, swift publication, and editorial independence.
