Computer-assisted diagnosis for axillary lymph node metastasis of early breast cancer based on transformer with dual-modal adaptive mid-term fusion using ultrasound elastography

IF 5.4

CAS Zone 2, Medicine

Q1 ENGINEERING, BIOMEDICAL

Computerized Medical Imaging and Graphics

Pub Date: 2024-11-26

DOI:10.1016/j.compmedimag.2024.102472

Citations: 0

Abstract

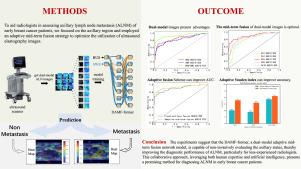

Accurate preoperative qualitative assessment of axillary lymph node metastasis (ALNM) in early breast cancer patients is crucial for precise clinical staging and for selecting axillary treatment strategies. Although previous studies have introduced artificial intelligence (AI) to improve ALNM assessment, they all focus on the predictive performance of their AI models and neglect clinical assistance to radiologists, which creates problems in clinical practice. To this end, we propose a human–AI collaboration strategy for ALNM diagnosis in early breast cancer, in which a novel deep learning framework, termed DAMF-former, is designed to assist radiologists in evaluating ALNM. Specifically, the DAMF-former focuses on the axillary region rather than on the primary tumor area targeted in previous studies. To mimic how radiologists alternately integrate the ultrasound elastography (UE) images of the target axillary lymph nodes for comprehensive analysis, adaptive mid-term fusion is proposed to alternately extract and adaptively fuse high-level features from the dual-modal UE images (i.e., B-mode ultrasound and Shear Wave Elastography). To further improve the diagnostic outcome of the DAMF-former, an adaptive Youden index scheme is proposed to process the fully fused dual-modal UE image features at the end of the framework, balancing diagnostic performance in terms of sensitivity and specificity. The clinical experiment indicates that the DAMF-former can assist less-experienced radiologists and improve their diagnostic ability for ALNM. In particular, junior radiologists significantly improved their diagnostic outcome from 0.807 AUC [95% CI: 0.781, 0.830] to 0.883 AUC [95% CI: 0.861, 0.902] (p-value < 0.0001).
Moreover, radiologists of different experience levels showed strong agreement when assisted by the DAMF-former (Kappa values ranging from 0.805 to 0.895; p-value < 0.0001), suggesting that less-experienced radiologists can potentially reach a diagnostic level similar to that of experienced radiologists through human–AI collaboration. This study explores a potential solution for human–AI collaboration in ALNM diagnosis based on UE images.
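The abstract reports two evaluation quantities: an operating point chosen with the Youden index (Youden's J = sensitivity + specificity - 1) and Cohen's kappa for agreement between readers. The sketch below shows the standard, non-adaptive versions of both, assuming binary labels where 1 denotes metastasis and a case is called positive when its model score is at or above the threshold. It is not the authors' adaptive Youden index scheme, whose details the abstract does not give, and the function names `youden_threshold` and `cohen_kappa` are hypothetical.

```python
from typing import Sequence, Tuple


def youden_threshold(scores: Sequence[float], labels: Sequence[int]) -> Tuple[float, float]:
    """Hypothetical helper: standard (non-adaptive) Youden's J threshold search.

    Returns (threshold, J) maximizing J = sensitivity + specificity - 1,
    where a case is called positive (label 1, e.g. metastatic) when
    score >= threshold. Candidate thresholds are the distinct observed scores.
    """
    pos = sum(1 for y in labels if y == 1)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need both positive and negative cases")
    best_t, best_j = float("nan"), -1.0
    for t in sorted(set(scores)):  # ascending, so ties keep the lowest threshold
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        tn = sum(1 for s, y in zip(scores, labels) if s < t and y == 0)
        j = tp / pos + tn / neg - 1.0  # sensitivity + specificity - 1
        if j > best_j:
            best_t, best_j = t, j
    return best_t, best_j


def cohen_kappa(rater_a: Sequence[int], rater_b: Sequence[int]) -> float:
    """Hypothetical helper: Cohen's kappa for two readers' categorical calls."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    a, b = list(rater_a), list(rater_b)
    categories = set(a) | set(b)
    # Observed agreement: fraction of cases where both readers agree.
    p_observed = sum(1 for x, y in zip(a, b) if x == y) / n
    # Chance agreement from each reader's marginal category frequencies.
    p_expected = sum((a.count(c) / n) * (b.count(c) / n) for c in categories)
    if p_expected == 1.0:
        return 1.0  # degenerate case: both readers used one identical category throughout
    return (p_observed - p_expected) / (1.0 - p_expected)
```

For example, with scores `[0.1, 0.2, 0.3, 0.6, 0.7, 0.9]` and labels `[0, 0, 0, 1, 1, 1]`, `youden_threshold` returns `(0.6, 1.0)`, the cut-off that separates the two classes perfectly. Scanning only the observed scores keeps the search exact for a finite sample; when several thresholds tie on J, the lowest one is kept.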

Source journal

CiteScore: 10.70

Self-citation rate: 3.50%

Articles published: 71

Review time: 26 days

Journal description:

The purpose of the journal Computerized Medical Imaging and Graphics is to act as a source for the exchange of research results concerning algorithmic advances, development, and application of digital imaging in disease detection, diagnosis, intervention, prevention, precision medicine, and population health. Included in the journal will be articles on novel computerized imaging or visualization techniques, including artificial intelligence and machine learning, augmented reality for surgical planning and guidance, big biomedical data visualization, computer-aided diagnosis, computerized-robotic surgery, image-guided therapy, imaging scanning and reconstruction, mobile and tele-imaging, radiomics, and imaging integration and modeling with other information relevant to digital health. The types of biomedical imaging include: magnetic resonance, computed tomography, ultrasound, nuclear medicine, X-ray, microwave, optical and multi-photon microscopy, video and sensory imaging, and the convergence of biomedical images with other non-imaging datasets.