Fooling human detectors via robust and visually natural adversarial patches

IF 5.5

Zone 2, Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

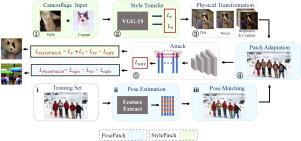

Deep neural networks (DNNs) are vulnerable to adversarial attacks. Physical attacks alter local regions of an image, either by physically attaching crafted objects or by synthesizing adversarial patches, which makes them applicable to real-world image capture scenarios. Current adversarial patches are typically generated from random noise, so their textures differ from those of the surrounding image. They are also developed without considering the relationship between human pose and adversarial robustness. The resulting unnatural pose and texture make the patches noticeable in practice. In this work, we propose to synthesize adversarial patches that are visually natural in both pose and texture. To adapt adversarial patches to human pose, we propose a patch adaptation network, PosePatch, for patch synthesis, guided by a perspective transform based on estimated human poses. We also develop a network, StylePatch, that generates harmonized textures for adversarial patches. The two networks are combined for end-to-end training. As a result, our method can synthesize adversarial patches for arbitrary human images without knowing the pose or patch location in advance. Experiments on benchmark datasets and in real-world scenarios show that our method is robust to human pose variations and that the synthesized adversarial patches are effective; a user study validates their naturalness.
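The abstract mentions a perspective transform guided by estimated human poses. As a rough illustration of that geometric step only (not the PosePatch network itself, which is learned), the sketch below computes a 3x3 perspective transform that maps the corners of a square patch onto a torso quadrilateral. The keypoint coordinates, the choice of shoulders and hips as anchor points, and the function names are all hypothetical assumptions made for this example.

```python
import numpy as np


def homography_from_points(src, dst):
    """Solve for the 3x3 perspective transform H that maps 4 src points to 4 dst points."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), rearranged to be linear in h
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        # v = (h3*x + h4*y + h5) / (h6*x + h7*y + 1), rearranged the same way
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = np.linalg.solve(np.array(rows), np.array(rhs))
    # The ninth entry is fixed to 1 to remove the scale ambiguity.
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, pts):
    """Map 2D points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]


# Corners of a 64x64 patch (hypothetical size), clockwise from top-left.
patch_corners = [(0, 0), (63, 0), (63, 63), (0, 63)]
# Hypothetical estimated keypoints in image pixels:
# left shoulder, right shoulder, right hip, left hip.
torso_quad = [(120, 80), (180, 90), (175, 200), (125, 190)]

H = homography_from_points(patch_corners, torso_quad)
warped_corners = apply_homography(H, patch_corners)
```

In a full pipeline, a transform of this kind would be used to resample the patch pixels into the image; how the paper's network predicts or refines it is not specified in the abstract.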

Source journal

Neurocomputing

Engineering and Technology - Computer Science: Artificial Intelligence

CiteScore

13.10

Self-citation rate

10.00%

Articles published

1382

Review time

70 days

About the journal:

Neurocomputing publishes articles describing recent fundamental contributions in the field of neurocomputing. Neurocomputing theory, practice and applications are the essential topics being covered.
