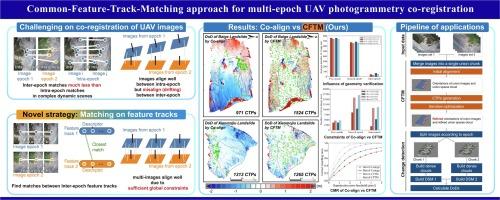

Common-feature-track-matching approach for multi-epoch UAV photogrammetry co-registration

IF 10.6

Zone 1 Earth Sciences

Q1 GEOGRAPHY, PHYSICAL

ISPRS Journal of Photogrammetry and Remote Sensing

Pub Date : 2024-11-14

DOI:10.1016/j.isprsjprs.2024.10.025

Citations: 0

Abstract

Automatic co-registration of multi-epoch Unmanned Aerial Vehicle (UAV) image sets remains challenging due to radiometric differences in complex dynamic scenes. Specifically, illumination changes and vegetation variations usually lead to insufficient and unevenly distributed common tie points (CTPs), resulting in under-fitting of the co-registration near areas without CTPs. In this paper, we propose a novel Common-Feature-Track-Matching (CFTM) approach for co-registering UAV image sets, which alleviates the shortage of CTPs in complex dynamic scenes. Instead of matching features between multi-epoch images, we first search for correspondences between multi-epoch feature tracks (i.e., groups of features corresponding to the same 3D points), which avoids the removal of matches due to unreliable estimation of the relative pose between inter-epoch image pairs. Then, the CTPs are triangulated from the successfully matched track pairs. Since an even distribution of CTPs is crucial for robust co-registration, a block-based strategy is designed, which also enables parallel computation. Finally, an iterative optimization algorithm is developed to gradually select the best CTPs to refine the poses of multi-epoch images. We assess the performance of our method on two challenging datasets. The results show that CFTM can automatically acquire adequate and evenly distributed CTPs in complex dynamic scenes, achieving a co-registration accuracy approximately four times higher than the state of the art in challenging scenarios. Our code is available at https://github.com/lixinlong1998/CoSfM.
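The abstract does not say how the block-based strategy partitions the scene or ranks CTPs, so the following is only a minimal sketch of the general idea of enforcing an even spatial distribution. It assumes square grid blocks in a planar coordinate frame and a per-point error score; the record layout, the function name `select_ctps_per_block`, and the parameters `block_size` and `max_per_block` are hypothetical and are not taken from the CoSfM code.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical record for one candidate CTP: (x, y, error_score).
# The real method may use a different quality measure.
CTP = Tuple[float, float, float]


def select_ctps_per_block(
    ctps: List[CTP], block_size: float, max_per_block: int
) -> List[CTP]:
    """Keep at most `max_per_block` lowest-error CTPs in each square block."""
    blocks: Dict[Tuple[int, int], List[CTP]] = defaultdict(list)
    for ctp in ctps:
        x, y, _ = ctp
        # Assign the point to a grid cell by integer division of its coordinates.
        key = (int(x // block_size), int(y // block_size))
        blocks[key].append(ctp)

    selected: List[CTP] = []
    # Blocks are independent of each other, so this loop could run in parallel.
    for key in sorted(blocks):
        best = sorted(blocks[key], key=lambda c: c[2])[:max_per_block]
        selected.extend(best)
    return selected


candidates = [
    (1.0, 1.0, 0.8),
    (2.0, 3.0, 0.2),
    (4.0, 4.0, 0.5),
    (12.0, 1.0, 0.9),
    (15.0, 18.0, 0.3),
]
selected = select_ctps_per_block(candidates, block_size=10.0, max_per_block=2)
print(selected)
```

Capping the count per block keeps a densely matched region from dominating the adjustment, while sparsely matched blocks retain whatever points they have, including ones with higher error.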

Source journal

ISPRS Journal of Photogrammetry and Remote Sensing

Engineering & Technology: Imaging Science & Photographic Technology

CiteScore

21.00

Self-citation rate

6.30%

Articles published

273

Review time

40 days

About the journal:

The ISPRS Journal of Photogrammetry and Remote Sensing (P&RS) serves as the official journal of the International Society for Photogrammetry and Remote Sensing (ISPRS). It acts as a platform for scientists and professionals worldwide who are involved in various disciplines that utilize photogrammetry, remote sensing, spatial information systems, computer vision, and related fields. The journal aims to facilitate communication and dissemination of advancements in these disciplines, while also acting as a comprehensive source of reference and archive.

P&RS endeavors to publish high-quality, peer-reviewed research papers that are preferably original and have not been published before. These papers can cover scientific/research, technological development, or application/practical aspects. Additionally, the journal welcomes papers that are based on presentations from ISPRS meetings, as long as they are considered significant contributions to the aforementioned fields.

In particular, P&RS encourages the submission of papers that are of broad scientific interest, showcase innovative applications (especially in emerging fields), have an interdisciplinary focus, discuss topics that have received limited attention in P&RS or related journals, or explore new directions in scientific or professional realms. It is preferred that theoretical papers include practical applications, while papers focusing on systems and applications should include a theoretical background.
