An explainable fast deep neural network for emotion recognition

IF 4.9

Zone 2, Medicine

Q1 ENGINEERING, BIOMEDICAL

Citations: 0

Abstract

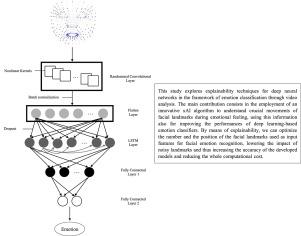

In artificial intelligence, the human tendency to reason logically before making a decision is mirrored by the concept of explainability: the ability of a model to give a clear, interpretable account of how it arrived at a particular outcome. This study explores explainability techniques for binary deep neural architectures that classify emotions from video. Using an improved version of the Integrated Gradients explainability method, we investigate how to optimize the input features of binary emotion classifiers that rely on facial landmark detection. The main contribution of this paper is an innovative explainable artificial intelligence algorithm that identifies the key facial landmark movements typical of each emotion and uses this information to improve the performance of deep learning-based emotion classifiers. Explainability lets us optimize the number and position of the facial landmarks used as input features for facial emotion recognition, reducing the impact of noisy landmarks and thereby increasing the accuracy of the resulting models. To test the effectiveness of the proposed approach, we considered a set of deep binary emotion classifiers, initially trained on the complete set of facial landmarks, which is then progressively reduced according to a suitable optimization procedure. The results demonstrate the robustness of the proposed explainable approach in capturing the relevance of the different facial points for each emotion, and show that it improves classification accuracy while reducing computational cost.
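The abstract does not specify the improved Integrated Gradients variant or the landmark optimization procedure. The following is therefore only a minimal sketch under stated assumptions. It applies standard Integrated Gradients (Sundararajan et al., 2017) to a toy binary classifier over flattened landmark coordinates. For a baseline x', standard Integrated Gradients attributes a prediction F(x) to feature i as IG_i(x) = (x_i - x'_i) * ∫_0^1 ∂F(x' + α(x - x'))/∂x_i dα. The sketch then sums the absolute attributions per landmark, averages them over a batch, and keeps the most relevant landmarks. Several details are hypothetical and do not come from the paper:

- the 68-point layout and the interleaved (x0, y0, x1, y1, ...) ordering
- the zero baseline
- the random one-hidden-layer network standing in for a trained emotion classifier
- every function name (`predict`, `grad_predict`, `integrated_gradients`, `landmark_relevance`, `prune_landmarks`)

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical setup: 68 landmarks, flattened as interleaved (x, y) pairs.
N_LANDMARKS = 68
D = 2 * N_LANDMARKS
H = 16

# Assumption: random weights stand in for a trained binary emotion classifier.
W1 = rng.normal(scale=0.1, size=(D, H))
b1 = np.zeros(H)
w2 = rng.normal(scale=0.1, size=H)
b2 = 0.0


def predict(x):
    """Probability of the target emotion for one flattened landmark vector x."""
    h = np.tanh(x @ W1 + b1)
    return 1.0 / (1.0 + np.exp(-(h @ w2 + b2)))


def grad_predict(x):
    """Analytic gradient of predict(x) with respect to the input x."""
    h = np.tanh(x @ W1 + b1)
    p = 1.0 / (1.0 + np.exp(-(h @ w2 + b2)))
    # Chain rule: sigmoid' = p(1 - p), tanh' = 1 - h^2, then back through W1.
    return W1 @ (p * (1.0 - p) * w2 * (1.0 - h ** 2))


def integrated_gradients(x, baseline, steps=64):
    """Standard Integrated Gradients, approximated with a midpoint Riemann sum."""
    alphas = (np.arange(steps) + 0.5) / steps
    grads = np.array([grad_predict(baseline + a * (x - baseline)) for a in alphas])
    return (x - baseline) * grads.mean(axis=0)


def landmark_relevance(attrs):
    """Mean over samples of |attribution| summed over each landmark's (x, y) pair."""
    attrs = np.atleast_2d(attrs)
    return np.abs(attrs).reshape(attrs.shape[0], -1, 2).sum(axis=2).mean(axis=0)


def prune_landmarks(relevance, keep):
    """Indices of the `keep` most relevant landmarks, sorted by landmark index."""
    return np.sort(np.argsort(relevance)[::-1][:keep])


# Usage on a hypothetical batch of landmark vectors with a zero baseline.
X = rng.normal(size=(32, D))
baseline = np.zeros(D)
attrs = np.array([integrated_gradients(x, baseline) for x in X])
relevance = landmark_relevance(attrs)
kept = prune_landmarks(relevance, keep=20)
print("kept landmarks:", kept)
```

Completeness provides a quick sanity check. Up to the Riemann-sum error, the attributions for a sample should sum to F(x) - F(x'). In the workflow the abstract outlines, the classifier would then be retrained on the reduced landmark set and the ranking repeated. That retraining loop and the paper's actual selection criterion are not reproduced here.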



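Source journal

Biomedical Signal Processing and Control

Engineering & Technology - Engineering: Biomedical

CiteScore: 9.80

Self-citation rate: 13.70%

Articles published: 822

Review time: 4 months

Journal description: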

Biomedical Signal Processing and Control aims to provide a cross-disciplinary international forum for the interchange of information on research in the measurement and analysis of signals and images in clinical medicine and the biological sciences. Emphasis is placed on contributions dealing with the practical, applications-led research on the use of methods and devices in clinical diagnosis, patient monitoring and management.

Biomedical Signal Processing and Control reflects the main areas in which these methods are being used and developed at the interface of both engineering and clinical science. The scope of the journal is defined to include relevant review papers, technical notes, short communications and letters. Tutorial papers and special issues will also be published.
