Fast and generalizable micromagnetic simulation with deep neural nets

IF 18.8

Zone 1, Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

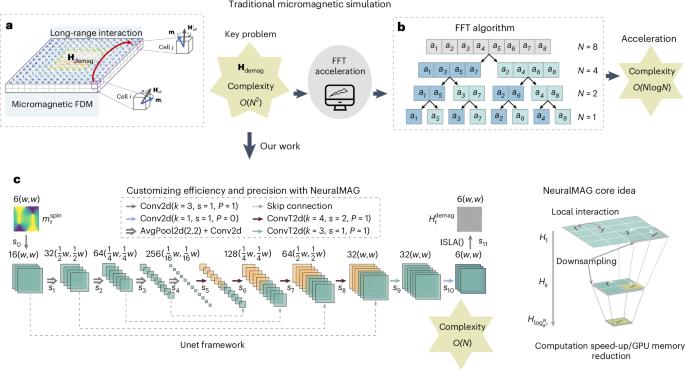

Micromagnetics has made important progress, driven by its wide-ranging applications in magnetic storage design. Numerical simulation, a cornerstone of micromagnetics research, relies on first-principles rules to compute the dynamic evolution of micromagnetic systems using the Landau–Lifshitz–Gilbert equation. However, these simulations are often hindered by their slow speed. Although fast Fourier transform (FFT) calculations reduce the computational complexity to O(N log N), they remain impractical for large-scale simulations. Here we introduce NeuralMAG, a deep learning approach to micromagnetic simulation. Our approach follows the Landau–Lifshitz–Gilbert iterative framework but accelerates the computation of demagnetizing fields by employing a U-shaped neural network. This architecture comprises an encoder that extracts aggregated spins at various scales and learns the local interaction at each scale, followed by a decoder that accumulates the local interactions at different scales to approximate the global convolution. This divide-and-accumulate scheme achieves a time complexity of O(N), notably enhancing the speed and feasibility of large-scale simulations. Unlike existing neural methods, NeuralMAG concentrates on the core computation rather than an end-to-end approximation for a specific task, which makes it inherently generalizable. To validate the new approach, we trained a single model and evaluated it on two micromagnetics tasks with various sample sizes, shapes and material settings.

Many physical systems involve long-range interactions, which present a considerable obstacle to large-scale simulations. Cai, Li and Wang introduce NeuralMAG, a deep learning approach to reduce complexity and accelerate micromagnetic simulations.
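To make the two ingredients named in the abstract concrete, the following is a minimal NumPy sketch, not the authors' implementation. It shows (a) one explicit Euler step of the Landau–Lifshitz–Gilbert equation in its Landau–Lifshitz form and (b) why an FFT turns a global convolution into an O(N log N) operation. The function names, the dimensionless parameters `alpha`, `gamma` and `dt`, the scalar toy kernel and the periodic boundary are all assumptions made for illustration. A real demagnetizing-field solver convolves a tensor kernel with all three magnetization components, and NeuralMAG replaces that step with a U-shaped network.

```python
import numpy as np


def llg_step(m, h_eff, dt, alpha=0.1, gamma=1.0):
    """One explicit Euler step of the LLG equation (Landau-Lifshitz form).

    m, h_eff: arrays of shape (..., 3) holding unit magnetization vectors
    and the effective field. alpha (damping) and gamma (gyromagnetic
    ratio) are dimensionless placeholder values, not material constants.
    """
    m_cross_h = np.cross(m, h_eff)          # precession term
    m_cross_mxh = np.cross(m, m_cross_h)    # damping term
    dmdt = -gamma / (1.0 + alpha**2) * (m_cross_h + alpha * m_cross_mxh)
    m_new = m + dt * dmdt
    # Euler steps do not conserve |m|, so renormalize each spin.
    return m_new / np.linalg.norm(m_new, axis=-1, keepdims=True)


def fft_convolve2d(field, kernel):
    """Circular 2D convolution via FFT: O(N log N) for N grid cells."""
    spectrum = np.fft.fft2(field) * np.fft.fft2(kernel)
    return np.real(np.fft.ifft2(spectrum))


def direct_convolve2d(field, kernel):
    """Same circular convolution by direct summation: O(N^2).

    Included only to check the FFT version on small grids.
    """
    rows, cols = field.shape
    out = np.zeros_like(field, dtype=float)
    for i in range(rows):
        for j in range(cols):
            # Shift the kernel so that kernel[0, 0] sits at cell (i, j).
            shifted = np.roll(np.roll(kernel, i, axis=0), j, axis=1)
            out += field[i, j] * shifted
    return out
```

In this sketch a simulation loop would recompute `h_eff` (including the long-range demagnetizing contribution) before every call to `llg_step`, which is why the cost of the convolution dominates at large N.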


Source journal

Nature Machine Intelligence

Multiple-

CiteScore

36.90

Self-citation rate

2.10%

Articles published

127

Journal description:

Nature Machine Intelligence is a distinguished publication that presents original research and reviews on various topics in machine learning, robotics, and AI. Our focus extends beyond these fields, exploring their profound impact on other scientific disciplines, as well as societal and industrial aspects. We recognize limitless possibilities wherein machine intelligence can augment human capabilities and knowledge in domains like scientific exploration, healthcare, medical diagnostics, and the creation of safe and sustainable cities, transportation, and agriculture. Simultaneously, we acknowledge the emergence of ethical, social, and legal concerns due to the rapid pace of advancements.

To foster interdisciplinary discussions on these far-reaching implications, Nature Machine Intelligence serves as a platform for dialogue facilitated through Comments, News Features, News & Views articles, and Correspondence. Our goal is to encourage a comprehensive examination of these subjects.

Similar to all Nature-branded journals, Nature Machine Intelligence operates under the guidance of a team of skilled editors. We adhere to a fair and rigorous peer-review process, ensuring high standards of copy-editing and production, swift publication, and editorial independence.
