Mamba-in-Mamba: Centralized Mamba-Cross-Scan in Tokenized Mamba Model for Hyperspectral Image Classification

IF 5.5

Zone 2: Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

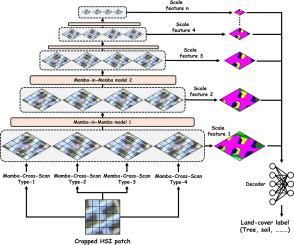

Hyperspectral image (HSI) classification plays a crucial role in remote sensing (RS) applications, enabling the precise identification of materials and land cover based on spectral information. This supports tasks such as agricultural management and urban planning. Sequential neural models such as Recurrent Neural Networks (RNNs) and Transformers have been adapted for this task, but both have limitations: RNNs struggle with feature aggregation and are sensitive to noise from interfering pixels, whereas Transformers require extensive computational resources and tend to underperform when HSI datasets contain limited or unbalanced training samples. Mamba architectures have emerged to address these challenges, offering a balance between RNNs and Transformers through lightweight, parallel scanning. Although models such as Vision Mamba (ViM) and Visual Mamba (VMamba) have improved results on visual tasks, their application to HSI classification remains underexplored, particularly in handling land-cover semantic tokens and multi-scale feature aggregation for patch-wise classifiers. In response, this study introduces the Mamba-in-Mamba (MiM) architecture for HSI classification, marking a pioneering effort in this domain. The MiM model features: (1) a novel centralized Mamba-Cross-Scan (MCS) mechanism for efficient image-to-sequence data transformation; (2) a Tokenized Mamba (T-Mamba) encoder that incorporates a Gaussian Decay Mask (GDM), Semantic Token Learner (STL), and Semantic Token Fuser (STF) for enhanced feature generation; and (3) a Weighted MCS Fusion (WMF) module with a Multi-Scale Loss Design for improved training efficiency. Experimental results on four public HSI datasets (Indian Pines, Pavia University, Houston2013, and WHU-Hi-Honghu) show that the method improves overall accuracy by up to 3.3%, 2.7%, 1.5%, and 2.3%, respectively, over state-of-the-art approaches (e.g., SSFTT and MAEST) under both fixed and disjoint training-testing settings.
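The abstract names a Gaussian Decay Mask (GDM) but does not give its formula. As a rough illustration only, the sketch below assumes the common form in which each pixel of a square patch is weighted by an isotropic Gaussian of its distance from the center pixel, so that pixels farther from the pixel being classified contribute less. The function name `gaussian_decay_mask` and the parameters `patch_size` and `sigma` are hypothetical and are not taken from the paper.

```python
import math


def gaussian_decay_mask(patch_size, sigma):
    """Return a patch_size x patch_size grid of weights in (0, 1].

    Assumption (not from the paper): weight = exp(-d^2 / (2 * sigma^2)),
    where d is the Euclidean distance from the center pixel.
    """
    if patch_size % 2 == 0:
        raise ValueError("patch_size must be odd so a center pixel exists")
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    center = patch_size // 2
    return [
        [
            math.exp(-((row - center) ** 2 + (col - center) ** 2)
                     / (2.0 * sigma ** 2))
            for col in range(patch_size)
        ]
        for row in range(patch_size)
    ]


# Hypothetical settings: a 5x5 patch with sigma = 1.5.
mask = gaussian_decay_mask(patch_size=5, sigma=1.5)
for row in mask:
    print(" ".join(f"{w:.3f}" for w in row))
```

Under this assumption the center weight is exactly 1 and the weights fall off symmetrically toward the patch corners; a smaller `sigma` would suppress neighboring pixels more aggressively.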



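Source journal: Neurocomputing

Category: Engineering & Technology - Computer Science: Artificial Intelligence

CiteScore: 13.10

Self-citation rate: 10.00%

Articles published: 1382

Review time: 70 days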

Journal description:

Neurocomputing publishes articles describing recent fundamental contributions in the field of neurocomputing. Neurocomputing theory, practice and applications are the essential topics being covered.
