Advancing MRI segmentation with CLIP-driven semi-supervised learning and semantic alignment

IF 5.5

2区 计算机科学

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

引用次数: 0

Abstract

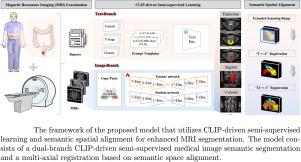

Precise segmentation and reconstruction of multi-structures within MRI are crucial for clinical applications such as surgical navigation. However, medical image segmentation faces several challenges. Although semi-supervised methods can reduce the annotation workload, they often suffer from limited robustness. To address this issue, this study proposes a novel CLIP-driven semi-supervised model, that includes two branches and a module. In the image branch, copy-paste is used as data augmentation method to enhance consistency learning. In the text branch, patient-level information is encoded via CLIP to drive the image branch. Notably, a novel cross-modal fusion module is designed to enhance the alignment and representation of text and image. Additionally, a semantic spatial alignment module is introduced to register segmentation results from different axial MRIs into a unified space. Three multi-modal datasets (one private and two public) were constructed to demonstrate the model’s performance. Compared to previous state-of-the-art methods, this model shows a significant advantage with both 5% and 10% labeled data. This study constructs a robust semi-supervised medical segmentation model, particularly effective in addressing label inconsistency and abnormal organ deformations. It also tackles the axial non-orthogonality challenges inherent in MRI, providing a consistent view of multi-structures.

利用 CLIP 驱动的半监督学习和语义对齐推进磁共振成像分割

磁共振成像中多结构的精确分割和重建对于手术导航等临床应用至关重要。然而,医学图像分割面临着一些挑战。虽然半监督方法可以减少标注工作量,但其鲁棒性往往有限。为解决这一问题,本研究提出了一种新颖的 CLIP 驱动的半监督模型,包括两个分支和一个模块。在图像分支中,复制粘贴被用作数据增强方法,以提高学习的一致性。在文本分支中,通过 CLIP 对患者层面的信息进行编码,从而驱动图像分支。值得注意的是,设计了一个新颖的跨模态融合模块,以增强文本和图像的对齐和表示。此外,还引入了一个语义空间配准模块,将不同轴向核磁共振成像的分割结果注册到一个统一的空间中。为了证明该模型的性能,我们构建了三个多模态数据集(一个私人数据集和两个公共数据集)。与之前最先进的方法相比,该模型在使用 5%和 10%的标记数据时均显示出显著优势。这项研究构建了一个稳健的半监督医疗分割模型,尤其是在解决标签不一致和器官异常变形方面非常有效。它还解决了核磁共振成像固有的轴向非正交性难题,提供了一致的多结构视图。

本文章由计算机程序翻译,如有差异,请以英文原文为准。

求助全文

约1分钟内获得全文

求助全文

来源期刊

Neurocomputing

工程技术-计算机:人工智能

CiteScore

13.10

自引率

10.00%

发文量

1382

审稿时长

70 days

期刊介绍:

Neurocomputing publishes articles describing recent fundamental contributions in the field of neurocomputing. Neurocomputing theory, practice and applications are the essential topics being covered.

求助内容:

求助内容: 应助结果提醒方式:

应助结果提醒方式: