Results and implications for generative AI in a large introductory biomedical and health informatics course

IF 12.4

CAS Zone 1, Medicine

Q1 HEALTH CARE SCIENCES & SERVICES

Abstract

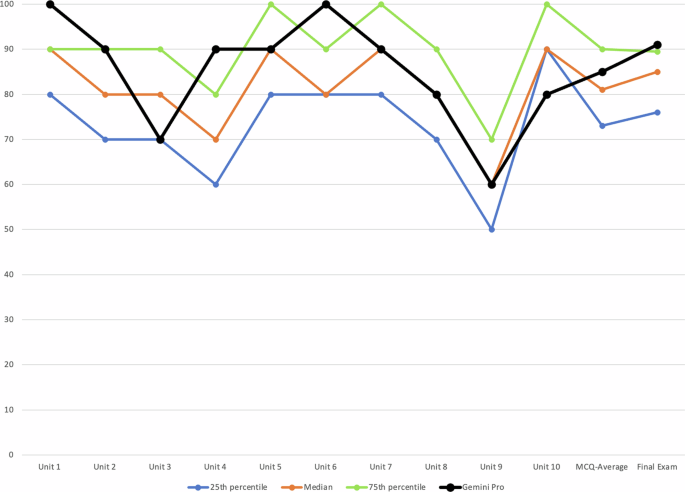

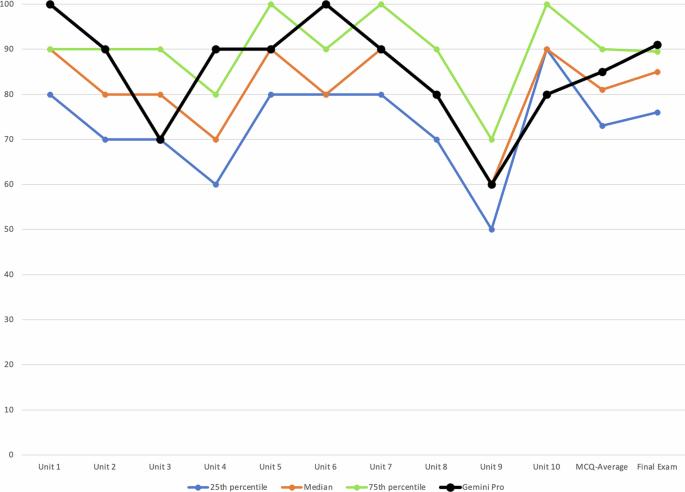

Generative artificial intelligence (AI) systems have performed well at many biomedical tasks, but few studies have compared their performance directly with that of students in higher-education courses. We compared student scores on knowledge assessments with the scores of 6 large language model (LLM) systems, prompted the way typical students would use them, in a large online introductory course in biomedical and health informatics taken by graduate, continuing education, and medical students. The state-of-the-art LLM systems were prompted to answer multiple-choice questions (MCQs) and final exam questions. We compared the scores of 139 students (30 graduate students, 85 continuing education students, and 24 medical students) with those of the LLM systems. All of the LLMs scored between the 50th and 75th percentiles of students on both the MCQs and the final exam questions. The performance of LLMs raises questions about student assessment in higher education, especially in courses that are knowledge-based and online.



Source journal: npj Digital Medicine


CiteScore: 25.10

Self-citation rate: 3.30%

Articles published: 170

Review time: 15 weeks

Journal description:

npj Digital Medicine is an online open-access journal that focuses on publishing peer-reviewed research in the field of digital medicine. The journal covers various aspects of digital medicine, including the application and implementation of digital and mobile technologies in clinical settings, virtual healthcare, and the use of artificial intelligence and informatics.

The primary goal of the journal is to support innovation and the advancement of healthcare through the integration of new digital and mobile technologies. When determining if a manuscript is suitable for publication, the journal considers four important criteria: novelty, clinical relevance, scientific rigor, and digital innovation.
