Learning integral operators via neural integral equations

Impact factor: 18.8

Zone 1, Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

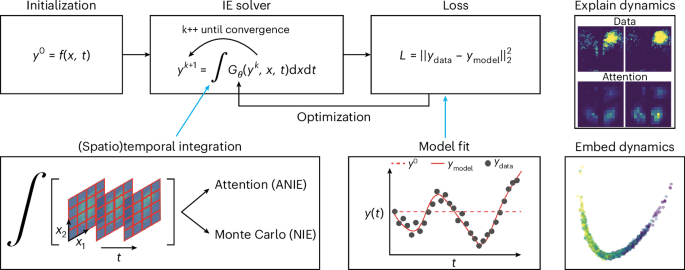

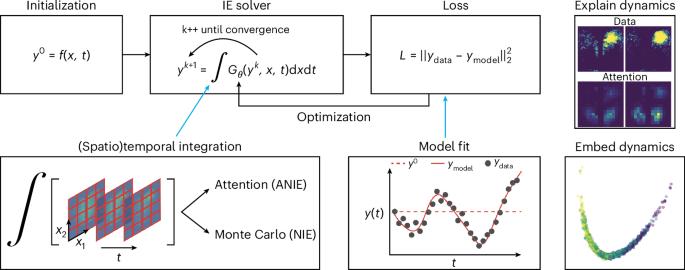

Nonlinear operators with long-distance spatiotemporal dependencies are fundamental in modelling complex systems across sciences; yet, learning these non-local operators remains challenging in machine learning. Integral equations, which model such non-local systems, have wide-ranging applications in physics, chemistry, biology and engineering. We introduce the neural integral equation, a method for learning unknown integral operators from data using an integral equation solver. To improve scalability and model capacity, we also present the attentional neural integral equation, which replaces the integral with self-attention. Both models are grounded in the theory of second-kind integral equations, where the indeterminate appears both inside and outside the integral operator. We provide a theoretical analysis showing how self-attention can approximate integral operators under mild regularity assumptions, further deepening previously reported connections between transformers and integration, as well as deriving corresponding approximation results for integral operators. Through numerical benchmarks on synthetic and real-world data, including Lotka–Volterra, Navier–Stokes and Burgers’ equations, as well as brain dynamics and integral equations, we showcase the models’ capabilities and their ability to derive interpretable dynamics embeddings. Our experiments demonstrate that attentional neural integral equations outperform existing methods, especially for longer time intervals and higher-dimensional problems. Our work addresses a critical gap in machine learning for non-local operators and offers a powerful tool for studying unknown complex systems with long-range dependencies.

Integral equations are used in science and engineering to model complex systems with non-local dependencies; however, existing traditional and machine-learning-based methods cannot yield accurate or efficient solutions in several complex cases.
Zappala and colleagues introduce a neural-network-based method that can learn an integral operator and its dynamics from data, demonstrating higher accuracy or scalability compared with several state-of-the-art methods.
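Both models described above rest on second-kind integral equations, in which the unknown y appears both outside and inside the integral, for example y(t) = f(t) + λ∫_a^b K(t, s, y(s)) ds. The sketch below is not the authors' neural integral equation or its attentional variant; it only illustrates, under stated assumptions, the two ideas the abstract names. The hypothetical `solve_second_kind` solves a Fredholm-type second-kind equation with a hand-written kernel (where a neural integral equation would use a learned one) by Picard fixed-point iteration with trapezoidal quadrature, a standard textbook scheme chosen here for brevity that converges when the integral term is a contraction. The hypothetical `attention_integral` shows how softmax attention over sample points can be read as a normalized quadrature of ∫K(t, s)v(s) ds, assuming scalar queries, keys and values. All function and parameter names are illustrative assumptions.

```python
import math
from typing import Callable, List


# Hypothetical helper: a fixed, hand-written kernel stands in for the learned
# integral operator of a neural integral equation.
def solve_second_kind(
    f: Callable[[float], float],
    kernel: Callable[[float, float, float], float],
    a: float = 0.0,
    b: float = 1.0,
    n: int = 101,
    lam: float = 1.0,
    tol: float = 1e-10,
    max_iter: int = 500,
) -> List[float]:
    """Approximate y(t) = f(t) + lam * int_a^b K(t, s, y(s)) ds on a uniform grid.

    Uses Picard (fixed-point) iteration with trapezoidal quadrature; this
    converges when the integral term is a contraction in y.
    """
    h = (b - a) / (n - 1)
    ts = [a + i * h for i in range(n)]
    # Trapezoidal weights: h/2 at both endpoints, h in the interior.
    w = [h] * n
    w[0] = w[-1] = h / 2
    y = [f(t) for t in ts]  # initial guess y_0 = f
    for _ in range(max_iter):
        # Evaluate the right-hand side of the equation at the current iterate y.
        y_new = [
            f(t) + lam * sum(w[j] * kernel(t, ts[j], y[j]) for j in range(n))
            for t in ts
        ]
        err = max(abs(u - v) for u, v in zip(y_new, y))
        y = y_new
        if err < tol:
            break
    return y


# Hypothetical helper: softmax attention with scalar queries, keys and values,
# read as a normalized quadrature of int K(t, s) v(s) ds over the sample points,
# with K(t, s) proportional to exp(q(t) * k(s)).
def attention_integral(
    ts: List[float],
    q: Callable[[float], float],
    k: Callable[[float], float],
    v: Callable[[float], float],
) -> List[float]:
    out = []
    for t in ts:
        scores = [q(t) * k(s) for s in ts]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(sc - m) for sc in scores]
        # Normalizing by z means a uniform quadrature weight would cancel here.
        z = sum(weights)
        out.append(sum(wj * v(s) for wj, s in zip(weights, ts)) / z)
    return out
```

As a check, with f(t) = t and K(t, s, y) = t·s·y on [0, 1], the exact solution is y(t) = 1.5t, and `solve_second_kind(lambda t: t, lambda t, s, ys: t * s * ys)` returns values close to 1.5t on the grid (up to the small trapezoidal error). With a constant zero query, every attention weight is equal, so `attention_integral` reduces to the plain average of v over the sample points.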



Source journal: Nature Machine Intelligence

Multiple-

CiteScore: 36.90

Self-citation rate: 2.10%

Articles published: 127

Journal description:

Nature Machine Intelligence is a distinguished publication that presents original research and reviews on various topics in machine learning, robotics, and AI. Our focus extends beyond these fields, exploring their profound impact on other scientific disciplines, as well as societal and industrial aspects. We recognize limitless possibilities wherein machine intelligence can augment human capabilities and knowledge in domains like scientific exploration, healthcare, medical diagnostics, and the creation of safe and sustainable cities, transportation, and agriculture. Simultaneously, we acknowledge the emergence of ethical, social, and legal concerns due to the rapid pace of advancements.

To foster interdisciplinary discussions on these far-reaching implications, Nature Machine Intelligence serves as a platform for dialogue facilitated through Comments, News Features, News & Views articles, and Correspondence. Our goal is to encourage a comprehensive examination of these subjects.

Similar to all Nature-branded journals, Nature Machine Intelligence operates under the guidance of a team of skilled editors. We adhere to a fair and rigorous peer-review process, ensuring high standards of copy-editing and production, swift publication, and editorial independence.
