Generalizability of electroencephalographic interpretation using artificial intelligence: An external validation study

Abstract

Objective

The automated interpretation of clinical electroencephalograms (EEGs) using artificial intelligence (AI) holds the potential to bridge the treatment gap in resource-limited settings and reduce the workload at specialized centers. However, to facilitate broad clinical implementation, it is essential to establish generalizability across diverse patient populations and equipment. We assessed whether SCORE-AI demonstrates diagnostic accuracy comparable to that of experts when applied to a geographically different patient population, recorded with distinct EEG equipment and technical settings.

Methods

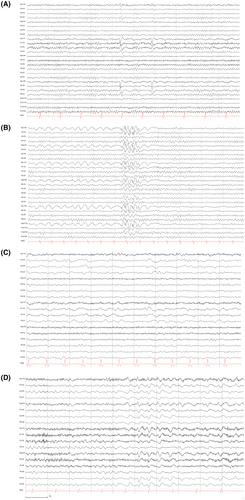

We assessed the diagnostic accuracy of a “fixed-and-frozen” AI model, using an independent dataset and external gold standard, and benchmarked it against three experts blinded to all other data. The dataset comprised 50% normal and 50% abnormal routine EEGs, equally distributed among the four major classes of EEG abnormalities (focal epileptiform, generalized epileptiform, focal nonepileptiform, and diffuse nonepileptiform). To assess diagnostic accuracy, we computed sensitivity, specificity, and accuracy of the AI model and the experts against the external gold standard.
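The three metrics named above can be sketched for a single abnormality category as follows. This is a minimal illustration, not the study's analysis code: the function name `diagnostic_metrics` and the toy labels are hypothetical, and each EEG is assumed to carry one boolean rating (category present or absent) from the rater and one from the gold standard.

```python
def diagnostic_metrics(predicted, reference):
    """Return (sensitivity, specificity, accuracy) for boolean ratings.

    predicted: ratings from the AI model or an expert (hypothetical input).
    reference: ratings from the external gold standard (hypothetical input).
    """
    pairs = list(zip(predicted, reference))
    tp = sum(1 for p, r in pairs if p and r)          # true positives
    tn = sum(1 for p, r in pairs if not p and not r)  # true negatives
    fp = sum(1 for p, r in pairs if p and not r)      # false positives
    fn = sum(1 for p, r in pairs if not p and r)      # false negatives

    sensitivity = tp / (tp + fn)      # share of abnormal EEGs detected
    specificity = tn / (tn + fp)      # share of normal EEGs correctly cleared
    accuracy = (tp + tn) / len(pairs)
    return sensitivity, specificity, accuracy


# Toy data for illustration only; these are not values from the study.
reference = [True, True, True, False, False, False, False, True]
predicted = [True, True, False, False, False, True, False, True]
sens, spec, acc = diagnostic_metrics(predicted, reference)
```

In a multi-category setting such as the one described, the same computation would be repeated once per category, treating that category as the positive class.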

Results

We analyzed EEGs from 104 patients (64 females, median age = 38.6 [range = 16–91] years). SCORE-AI performed on par with the experts, with an overall accuracy of 92% (95% confidence interval [CI] = 90%–94%) versus 94% (95% CI = 92%–96%) for the experts. There was no significant difference between SCORE-AI and the experts for any metric or category. SCORE-AI's performance did not differ significantly by vigilance state (false classification during wakefulness: 5/41 [12.2%], false classification during sleep: 2/11 [18.2%]; p = .63) or by the presence of normal variants (false classification in presence of normal variants: 4/14 [28.6%], false classification in absence of normal variants: 3/38 [7.9%]; p = .07).
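The abstract does not name the statistical test behind the two subgroup comparisons. As a hedged sketch, a two-sided Fisher's exact test (an assumption, chosen because the counts are small) can be applied to the 2×2 tables implied by the reported fractions. The function name `fisher_exact_two_sided` is hypothetical, and the test is implemented directly from the hypergeometric distribution using only the standard library.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]].

    Sums the probabilities of all tables with the same margins that are
    no more likely than the observed one.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = row1 + row2

    def weight(k):
        # Unnormalized hypergeometric probability of k in the top-left cell.
        return comb(row1, k) * comb(row2, col1 - k)

    observed = weight(a)
    k_min = max(0, col1 - row2)
    k_max = min(row1, col1)
    extreme = sum(
        weight(k) for k in range(k_min, k_max + 1) if weight(k) <= observed
    )
    return extreme / comb(total, col1)


# Rows: condition; columns: (false classifications, correct classifications).
# Awake 5/41 false vs. sleep 2/11 false.
p_vigilance = fisher_exact_two_sided(5, 36, 2, 9)
# Normal variants present 4/14 false vs. absent 3/38 false.
p_variants = fisher_exact_two_sided(4, 10, 3, 35)
```

Under this assumption the two p-values round to .63 and .07, which agrees with the reported figures. That agreement does not by itself confirm which test the authors used.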

Significance

SCORE-AI achieved diagnostic performance equal to that of human experts on an EEG dataset that was independent of the development dataset, drawn from a geographically distinct patient population, and recorded with different equipment and technical settings.
