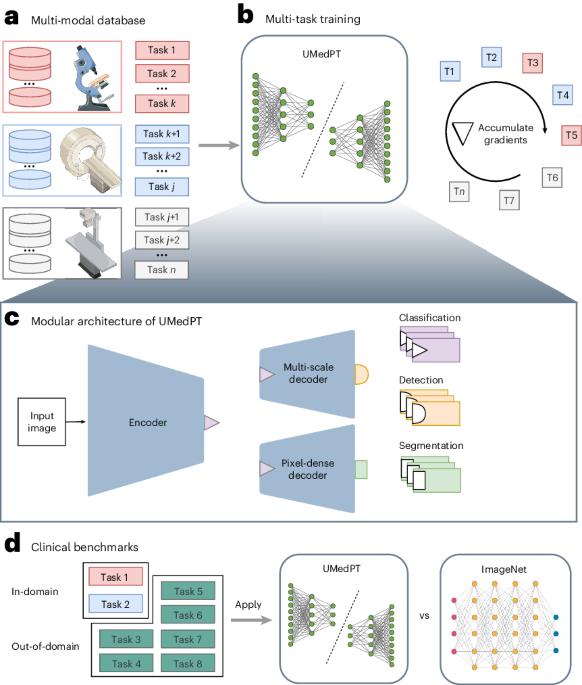

A multi-task learning strategy to pretrain models for medical image analysis

Impact factor (IF): 12

Q1 COMPUTER SCIENCE, INTERDISCIPLINARY APPLICATIONS

Citations: 0

Abstract

Pretraining powerful deep learning models requires large, comprehensive training datasets, which are often unavailable for medical imaging. In response, the universal biomedical pretrained (UMedPT) foundational model was developed based on multiple small and medium-sized datasets. This model reduced the amount of data required to learn new target tasks by at least 50%.

