How Deep Neural Networks Learn Compositional Data: The Random Hierarchy Model

IF 11.6

1区 物理与天体物理

Q1 PHYSICS, MULTIDISCIPLINARY

引用次数: 0

Abstract

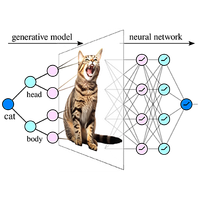

Deep learning algorithms demonstrate a surprising ability to learn high-dimensional tasks from limited examples. This is commonly attributed to the depth of neural networks, enabling them to build a hierarchy of abstract, low-dimensional data representations. However, how many training examples are required to learn such representations remains unknown. To quantitatively study this question, we introduce the random hierarchy model: a family of synthetic tasks inspired by the hierarchical structure of language and images. The model is a classification task where each class corresponds to a group of high-level features, chosen among several equivalent groups associated with the same class. In turn, each feature corresponds to a group of subfeatures chosen among several equivalent groups and so on, following a hierarchy of composition rules. We find that deep networks learn the task by developing internal representations invariant to exchanging equivalent groups. Moreover, the number of data required corresponds to the point where correlations between low-level features and classes become detectable. Overall, our results indicate how deep networks overcome the curse of dimensionality by building invariant representations and provide an estimate of the number of data required to learn a hierarchical task.

深度神经网络如何学习合成数据:随机层次模型

深度学习算法在从有限的示例中学习高维任务方面表现出了惊人的能力。这通常归功于神经网络的深度,使其能够建立抽象的低维数据表示层次。然而,需要多少训练示例才能学习到这种表征仍然是个未知数。为了对这一问题进行定量研究,我们引入了随机层次模型:这是受语言和图像的层次结构启发而产生的一系列合成任务。该模型是一种分类任务,其中每个类别对应一组高级特征,这些特征是从与同一类别相关的多个等价组中选择的。反过来,每个特征又对应一组子特征,这组子特征从多个等价组中选择,依此类推,遵循层次结构的组成规则。我们发现,深度网络通过开发不随等效组交换而变化的内部表征来学习任务。此外,所需的数据数量与低级特征和类别之间的相关性变得可检测的点相对应。总之,我们的研究结果表明了深度网络是如何通过建立不变表征来克服维度诅咒的,并提供了学习分层任务所需数据数量的估计值。

本文章由计算机程序翻译,如有差异,请以英文原文为准。

求助全文

约1分钟内获得全文

求助全文

来源期刊

Physical Review X

PHYSICS, MULTIDISCIPLINARY-

CiteScore

24.60

自引率

1.60%

发文量

197

审稿时长

3 months

期刊介绍:

Physical Review X (PRX) stands as an exclusively online, fully open-access journal, emphasizing innovation, quality, and enduring impact in the scientific content it disseminates. Devoted to showcasing a curated selection of papers from pure, applied, and interdisciplinary physics, PRX aims to feature work with the potential to shape current and future research while leaving a lasting and profound impact in their respective fields. Encompassing the entire spectrum of physics subject areas, PRX places a special focus on groundbreaking interdisciplinary research with broad-reaching influence.

求助内容:

求助内容: 应助结果提醒方式:

应助结果提醒方式: