Distributed constrained combinatorial optimization leveraging hypergraph neural networks

IF 18.8

Zone 1: Computer Science

Q1 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 0

Abstract

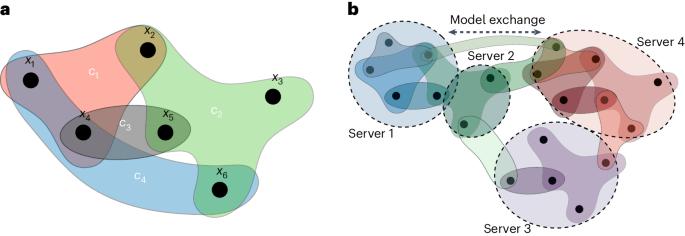

Scalable addressing of high-dimensional constrained combinatorial optimization problems is a challenge that arises in several science and engineering disciplines. Recent work introduced novel applications of graph neural networks for solving quadratic-cost combinatorial optimization problems. However, effective utilization of models such as graph neural networks to address general problems with higher-order constraints is an unresolved challenge. This paper presents a framework, HypOp, that advances the state of the art for solving combinatorial optimization problems in several aspects: (1) it generalizes the prior results to higher-order constrained problems with arbitrary cost functions by leveraging hypergraph neural networks; (2) it enables scalability to larger problems by introducing a new distributed and parallel training architecture; (3) it demonstrates generalizability across different problem formulations by transferring knowledge within the same hypergraph; (4) it substantially boosts the solution accuracy compared with the prior art by suggesting a fine-tuning step using simulated annealing; and (5) it shows remarkable progress on numerous benchmark examples, including hypergraph MaxCut, satisfiability and resource allocation problems, with notable run-time improvements using a combination of fine-tuning and distributed training techniques. We showcase the application of HypOp in scientific discovery by solving a hypergraph MaxCut problem on a National Drug Code drug-substance hypergraph. Through extensive experimentation on various optimization problems, HypOp demonstrates superiority over existing unsupervised-learning-based solvers and generic optimization methods.

Bolstering the broad and deep applicability of graph neural networks, Heydaribeni et al. introduce HypOp, a framework that uses hypergraph neural networks to solve general constrained combinatorial optimization problems. The presented method scales and generalizes well, improves accuracy and outperforms existing solvers on various benchmarking examples.
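To make two of the terms in the abstract concrete, the following is a minimal Python sketch of a hypergraph MaxCut objective and a simulated-annealing refinement of a given 0/1 node assignment. It is not HypOp's implementation: the function names (`cut_size`, `anneal_maxcut`), the single-node flip move, the geometric cooling schedule and all parameter defaults are assumptions chosen for illustration. A hyperedge is counted as cut when its nodes do not all fall on the same side.

```python
import math
import random


def cut_size(hyperedges, assignment):
    """Count hyperedges whose nodes are not all on the same side (0 or 1)."""
    return sum(1 for edge in hyperedges if len({assignment[v] for v in edge}) > 1)


def anneal_maxcut(hyperedges, num_nodes, init=None, steps=2000,
                  t_start=1.0, t_end=0.01, seed=0):
    """Refine a 0/1 assignment by simulated annealing (illustrative only).

    `init` stands in for a starting solution, for example one produced by a
    learned model; all defaults here are hypothetical.
    """
    rng = random.Random(seed)
    if init is not None:
        assignment = list(init)
    else:
        assignment = [rng.randint(0, 1) for _ in range(num_nodes)]
    current = cut_size(hyperedges, assignment)
    best, best_assignment = current, assignment[:]
    for step in range(steps):
        # Geometric cooling from t_start down to t_end.
        temp = t_start * (t_end / t_start) ** (step / max(steps - 1, 1))
        v = rng.randrange(num_nodes)
        assignment[v] ^= 1  # propose flipping one node to the other side
        candidate = cut_size(hyperedges, assignment)
        delta = candidate - current
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if delta >= 0 or rng.random() < math.exp(delta / temp):
            current = candidate
            if current > best:
                best, best_assignment = current, assignment[:]
        else:
            assignment[v] ^= 1  # reject: undo the flip
    return best_assignment, best


if __name__ == "__main__":
    # Toy hypergraph with four nodes and three hyperedges (made-up data).
    edges = [(0, 1, 2), (1, 2, 3), (0, 3)]
    solution, value = anneal_maxcut(edges, num_nodes=4, init=[0, 0, 0, 0])
    print(solution, value)
```

Because the best assignment seen so far is tracked separately, the returned cut value is never worse than that of the starting assignment, which is the property a fine-tuning step of this kind relies on.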

Source journal

Nature Machine Intelligence

Multiple-

CiteScore

36.90

Self-citation rate

2.10%

Articles published

127

About the journal:

Nature Machine Intelligence is a distinguished publication that presents original research and reviews on various topics in machine learning, robotics, and AI. Our focus extends beyond these fields, exploring their profound impact on other scientific disciplines, as well as societal and industrial aspects. We recognize limitless possibilities wherein machine intelligence can augment human capabilities and knowledge in domains like scientific exploration, healthcare, medical diagnostics, and the creation of safe and sustainable cities, transportation, and agriculture. Simultaneously, we acknowledge the emergence of ethical, social, and legal concerns due to the rapid pace of advancements.

To foster interdisciplinary discussions on these far-reaching implications, Nature Machine Intelligence serves as a platform for dialogue facilitated through Comments, News Features, News & Views articles, and Correspondence. Our goal is to encourage a comprehensive examination of these subjects.

Similar to all Nature-branded journals, Nature Machine Intelligence operates under the guidance of a team of skilled editors. We adhere to a fair and rigorous peer-review process, ensuring high standards of copy-editing and production, swift publication, and editorial independence.
