Exploration-based model learning with self-attention for risk-sensitive robot control


Abstract

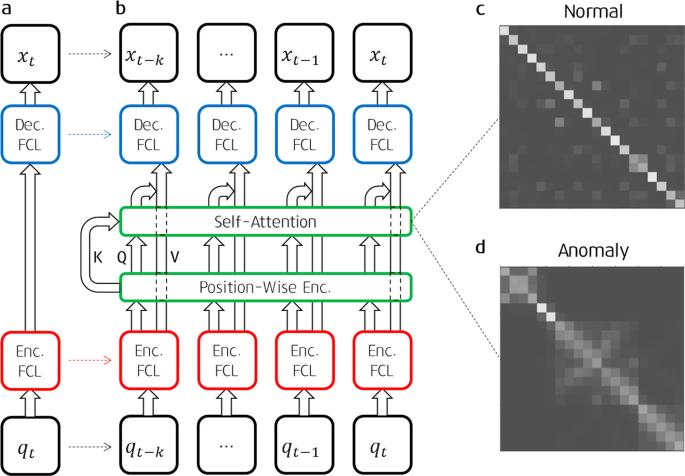

Model-based reinforcement learning for robot control alleviates the concerns about data collection and iterative policy improvement that arise in model-free methods. However, both approaches use exploration strategies that rely on heuristics with inherent randomness, which may cause instability or malfunction of the target system and render it susceptible to external perturbations. In this paper, we propose an online model update algorithm that can be run directly on real-world robot systems. The algorithm leverages a self-attention mechanism embedded in neural networks for the kinematics and dynamics models of the target system. The approximated model includes redundant self-attention paths to the time-independent kinematics and dynamics models, allowing us to detect abnormalities by calculating the trace values of the self-attention matrices. This approach reduces randomness during the exploration process and enables the detection and rejection of perturbations while the model is being updated. We validate the proposed method in simulation and with real-world robot systems in three application scenarios: path tracking of a soft robotic manipulator, kinesthetic teaching and behavior cloning of an industrial robotic arm, and gait generation of a legged robot. All of these demonstrations are achieved without the aid of simulation or prior knowledge of the models, which supports the proposed method’s universality across robotics applications.

