Random-reshuffled SARAH does not need full gradient computations

IF 1.1

Zone 4, Mathematics

Q2 MATHEMATICS, APPLIED

Citations: 0

Abstract

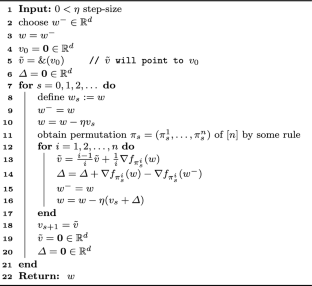

The StochAstic Recursive grAdient algoritHm (SARAH) is a variance-reduced variant of Stochastic Gradient Descent that periodically requires a full gradient of the objective function. In this paper, we remove the need for full gradient computations. This is achieved by using a random reshuffling strategy and aggregating the stochastic gradients obtained in each epoch. The aggregated stochastic gradients serve as an estimate of the full gradient in the SARAH algorithm. We provide a theoretical analysis of the proposed approach and conclude the paper with numerical experiments that demonstrate its efficiency.
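The idea in the abstract can be illustrated with a minimal sketch, which is not the paper's exact algorithm. It assumes a least-squares objective, the standard SARAH recursion, a running mean as the aggregation rule, and a deliberately conservative default step size; the names `grad_i`, `loss`, and `shuffled_sarah_sketch` are hypothetical and chosen only for this illustration.

```python
import random


def grad_i(a, b_i, x):
    """Gradient of the single term 0.5 * (a . x - b_i)**2 with respect to x."""
    r = sum(aj * xj for aj, xj in zip(a, x)) - b_i
    return [r * aj for aj in a]


def loss(A, b, x):
    """Mean of 0.5 * (a_i . x - b_i)**2 over all rows of A."""
    total = 0.0
    for a, b_i in zip(A, b):
        total += 0.5 * (sum(aj * xj for aj, xj in zip(a, x)) - b_i) ** 2
    return total / len(A)


def shuffled_sarah_sketch(A, b, epochs=100, lr=None, seed=0):
    """SARAH-style loop that never evaluates a full gradient at a single point."""
    rng = random.Random(seed)
    n, d = len(A), len(A[0])
    if lr is None:
        # Conservative step size: an assumption, not a tuned or published value.
        lr = 1.0 / (2.0 * n * max(sum(aj * aj for aj in a) for a in A))
    x = [0.0] * d
    agg = [0.0] * d  # aggregate of the previous epoch's stochastic gradients
    for _ in range(epochs):
        v = agg[:]  # stands in for the full gradient at the start of the epoch
        agg = [0.0] * d
        x_prev = x
        x = [xj - lr * vj for xj, vj in zip(x, v)]
        order = list(range(n))
        rng.shuffle(order)  # fresh random permutation for every epoch
        for k, i in enumerate(order, start=1):
            g_new = grad_i(A[i], b[i], x)
            g_old = grad_i(A[i], b[i], x_prev)
            # Running mean of the stochastic gradients seen in this epoch.
            agg = [m + (g - m) / k for m, g in zip(agg, g_new)]
            # Standard SARAH recursive gradient estimate.
            v = [vj + gn - go for vj, gn, go in zip(v, g_new, g_old)]
            x_prev = x
            x = [xj - lr * vj for xj, vj in zip(x, v)]
    return x
```

In this sketch the aggregate starts at zero, so the first pass leaves `x` unchanged and only collects gradients. Every later epoch starts from the previous epoch's running mean instead of a separately computed full gradient.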

Source journal: Optimization Letters

Management Science - Applied Mathematics

CiteScore: 3.40

Self-citation rate: 6.20%

Publication count: 116

Review time: 9 months

About the journal:

Optimization Letters is an international journal covering all aspects of optimization, including theory, algorithms, computational studies, and applications, and providing an outlet for rapid publication of short communications in the field. Originality, significance, quality and clarity are the essential criteria for choosing the material to be published.

Optimization has been expanding in all directions at an astonishing rate during the last few decades. New algorithmic and theoretical techniques have been developed, the diffusion into other disciplines has proceeded at a rapid pace, and our knowledge of all aspects of the field has grown even more profound. At the same time, one of the most striking trends in optimization is the constantly increasing interdisciplinary nature of the field.

Optimization Letters aims to communicate in a timely fashion all recent developments in optimization with concise short articles (limited to a total of ten journal pages). Such concise articles will be easily accessible to readers who work in any aspect of optimization and wish to be informed of recent developments.
