More human than human: measuring ChatGPT political bias

Impact factor: 2.2

Zone 3, Economics

Q2, Economics

Citations: 4

Abstract

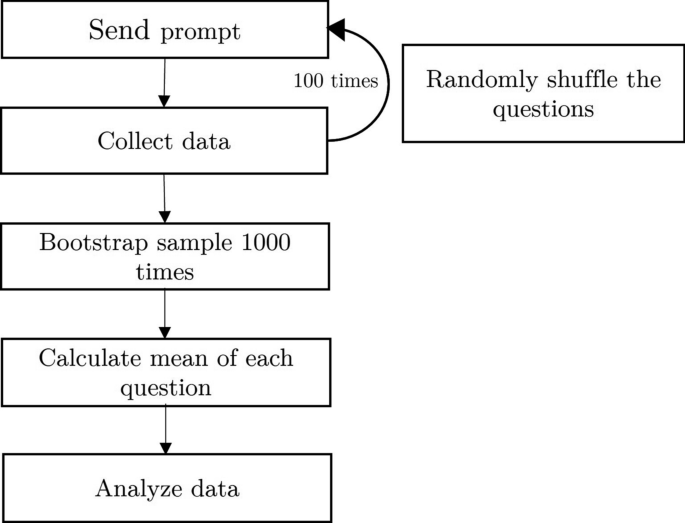

We investigate the political bias of ChatGPT, a large language model (LLM) that has become popular for retrieving factual information and generating content. Although ChatGPT claims to be impartial, the literature suggests that LLMs exhibit biases involving race, gender, religion, and political orientation. Political bias in LLMs can have adverse political and electoral consequences similar to those of bias in traditional and social media. Moreover, political bias can be harder to detect and eradicate than gender or racial bias. We propose a novel empirical design to infer whether ChatGPT has political biases: we ask it to impersonate someone from a given side of the political spectrum and compare these answers with its default responses. We also propose dose-response, placebo, and profession-politics alignment robustness tests. To reduce concerns about the randomness of the generated text, we collect answers to the same questions 100 times, randomizing the question order in each round. We find robust evidence that ChatGPT presents a significant and systematic political bias toward the Democrats in the US, Lula in Brazil, and the Labour Party in the UK. These results raise real concerns that ChatGPT, and LLMs in general, can extend or even amplify the existing challenges that the Internet and social media pose to political processes. Our findings have important implications for policymakers, the media, political actors, and academia.
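The data-collection step described above (the same questions asked 100 times, question order reshuffled each round, default answers compared with impersonated ones) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' code: `ask`, `collect_rounds`, `closest_persona`, and the persona labels are hypothetical; answers are assumed to be coded numerically (for example, on an agreement scale); and the nearest-mean distance comparison is a simple stand-in, not the estimator used in the paper.

```python
import math
import random
from statistics import mean
from typing import Callable, Dict, List, Sequence

# Hypothetical stand-in for a model query: ask(persona, question) -> numeric answer.
AskFn = Callable[[str, str], float]


def collect_rounds(
    questions: Sequence[str],
    personas: Sequence[str],
    ask: AskFn,
    n_rounds: int = 100,
    seed: int = 0,
) -> Dict[str, Dict[str, List[float]]]:
    """Ask every question n_rounds times per persona, reshuffling the order each round."""
    rng = random.Random(seed)
    answers = {p: {q: [] for q in questions} for p in personas}
    for _ in range(n_rounds):
        for persona in personas:
            order = list(questions)
            rng.shuffle(order)  # fresh random question order for this persona and round
            for q in order:
                answers[persona][q].append(ask(persona, q))
    return answers


def closest_persona(
    answers: Dict[str, Dict[str, List[float]]],
    default: str = "default",
) -> str:
    """Return the impersonated persona whose mean answers lie nearest the default's.

    Simple Euclidean distance between per-question means (an assumption,
    not the paper's estimator).
    """
    questions = list(answers[default])
    base = [mean(answers[default][q]) for q in questions]
    best, best_dist = "", math.inf
    for persona, per_q in answers.items():
        if persona == default:
            continue
        vec = [mean(per_q[q]) for q in questions]
        dist = math.dist(base, vec)
        if dist < best_dist:
            best, best_dist = persona, dist
    return best


if __name__ == "__main__":
    # Toy answers, made up purely to exercise the code; not data from the paper.
    toy = {"default": 1.0, "left": 0.9, "right": 3.0}
    data = collect_rounds(["q1", "q2"], ["default", "left", "right"],
                          lambda persona, q: toy[persona], n_rounds=5)
    print(closest_persona(data))  # prints "left"
```

Shuffling within each round, rather than fixing one order, is meant to keep any position or context effect in the conversation from lining up with a particular question across all repetitions.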


Source journal: Public Choice

CiteScore: 3.60

Self-citation rate: 18.80%

Articles published: 65

Journal description:

Public Choice deals with the intersection between economics and political science. The journal was founded at a time when economists and political scientists became interested in applying essentially economic methods to problems normally dealt with by political scientists. It has always retained strong traces of economic methodology, but new and fruitful techniques have since been developed that economists would not recognize. Public Choice therefore remains central in its chosen role of introducing the two groups to each other and allowing them to explain themselves through the medium of its pages.

Officially cited as: Public Choice
