PepperOSC: enabling interactive sonification of a robot’s expressive movement


Abstract

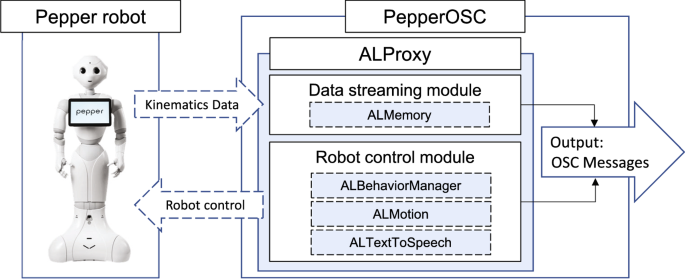

This paper presents the design and development of PepperOSC, an interface that connects Pepper and NAO robots with sound production tools to enable interactive sonification in human-robot interaction (HRI). The interface uses Open Sound Control (OSC) messages to stream kinematic data from the robots to a range of sound design and music production tools. PepperOSC has two goals: (i) to provide HRI researchers with a tool for developing multimodal user interfaces through sonification, and (ii) to lower the barrier for sound designers to contribute to HRI. To demonstrate its potential, this paper also presents two applications we carried out: (i) a course project in which two master's students created a robot sound model in Pure Data, and (ii) a museum installation featuring a Pepper robot, with sound models developed in MaxMSP by a sound designer and in SuperCollider by a composer/researcher in music technology. We further discuss potential use cases of PepperOSC in social robotics and artistic contexts. These applications demonstrate the versatility of PepperOSC and its capacity to support diverse aesthetic strategies for sonifying robot movement, offering a promising way to make human-robot interactions more effective and appealing.


Source journal: Journal on Multimodal User Interfaces
