Face2Gesture: Translating Facial Expressions Into Robot Movements Through Shared Latent Space Neural Networks

IF 5.5

Q2 ROBOTICS

Citations: 0

Abstract

In this work, we present a method for personalizing human-robot interaction by using emotive facial expressions to generate affective robot movements. Movement is an important medium for robots to communicate affective states, but the expertise and time required to craft new robot movements promote a reliance on fixed preprogrammed behaviors. Enabling robots to respond to multimodal user input with newly generated movements could stave off staleness of interaction and convey a deeper degree of affective understanding than current retrieval-based methods. We use autoencoder neural networks to compress robot movement data and facial expression images into a shared latent embedding space. Then, we use a reconstruction loss to generate movements from these embeddings and a triplet loss to align the embeddings by emotion class rather than by data modality. To subjectively evaluate our method, we conducted a user survey and found that generated happy and sad movements could be matched to their source face images. However, angry movements were most often mismatched to sad images. This multimodal data-driven generative method can expand an interactive agent’s behavior library and could be adopted for other multimodal affective applications.
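The abstract names two training signals, a reconstruction loss and a triplet loss, without giving their exact form. The following is a minimal sketch of how such losses are commonly written, not the authors' implementation. It assumes mean squared error for reconstruction, squared Euclidean distance with a hinge margin for the triplet term, and a simple weighted sum; every function name, the margin, the weight, and the toy 2-D embeddings are hypothetical.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def reconstruction_loss(original, reconstructed):
    """Mean squared error between an input and its decoded reconstruction."""
    return squared_distance(original, reconstructed) / len(original)


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge triplet loss (assumed form): zero once the negative is at least
    `margin` farther from the anchor than the positive is."""
    gap = squared_distance(anchor, positive) - squared_distance(anchor, negative)
    return max(0.0, gap + margin)


def total_loss(recon, triplet, triplet_weight=1.0):
    """Weighted sum of the two terms; the weighting scheme is an assumption."""
    return recon + triplet_weight * triplet


# Hypothetical 2-D embeddings: one face image and two movements.
face_happy = [1.0, 0.0]
move_happy = [0.9, 0.1]
move_sad = [-1.0, 0.0]

# Same-emotion pair is close across modalities, so the triplet term vanishes.
aligned = triplet_loss(face_happy, move_happy, move_sad)

# Swapping positive and negative simulates embeddings grouped the wrong way.
misaligned = triplet_loss(face_happy, move_sad, move_happy)
```

In this toy setup the anchor is a face embedding while the positive and negative are movement embeddings, which illustrates the idea of grouping by emotion class across modalities: the loss is zero when the happy face sits near the happy movement and far from the sad one, and large when the roles are swapped.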

在这项工作中,我们提出了一种通过使用情感面部表情来产生情感机器人动作的个性化人机交互方法。运动是机器人交流情感状态的重要媒介,但制作新的机器人运动所需的专业知识和时间促进了对固定预编程行为的依赖。使机器人能够用新生成的动作响应多模态用户输入,可以避免交互的陈旧,并传达比当前基于检索的方法更深程度的情感理解。我们使用自编码器神经网络将机器人运动数据和面部表情图像压缩到一个共享的潜在嵌入空间中。然后,我们使用重建损失来从这些嵌入中生成运动,并使用三重损失来根据情感类别而不是数据模式对齐嵌入。为了主观地评价我们的方法,我们对用户进行了调查,发现生成的快乐和悲伤的动作可以与他们的源面部图像相匹配。然而,愤怒的动作通常与悲伤的图像不匹配。这种多模态数据驱动生成方法可以扩展交互式智能体的行为库,并可用于其他多模态情感应用。

本文章由计算机程序翻译,如有差异,请以英文原文为准。

求助全文

约1分钟内获得全文

求助全文

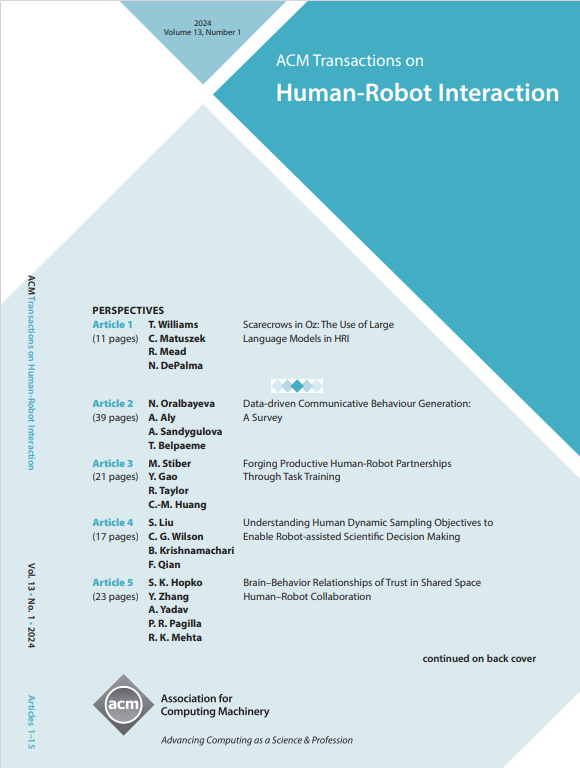

Source journal

ACM Transactions on Human-Robot Interaction

Computer Science-Artificial Intelligence

CiteScore

7.70

Self-citation rate

5.90%

Articles published

65

Journal description:

ACM Transactions on Human-Robot Interaction (THRI) is a prestigious Gold Open Access journal that aspires to lead the field of human-robot interaction as a top-tier, peer-reviewed, interdisciplinary publication. The journal prioritizes articles that significantly contribute to the current state of the art, enhance overall knowledge, have a broad appeal, and are accessible to a diverse audience. Submissions are expected to meet a high scholarly standard, and authors are encouraged to ensure their research is well-presented, advancing the understanding of human-robot interaction, adding cutting-edge or general insights to the field, or challenging current perspectives in this research domain.

THRI warmly invites well-crafted paper submissions from a variety of disciplines, encompassing robotics, computer science, engineering, design, and the behavioral and social sciences. The scholarly articles published in THRI may cover a range of topics such as the nature of human interactions with robots and robotic technologies, methods to enhance or enable novel forms of interaction, and the societal or organizational impacts of these interactions. The editorial team is also keen on receiving proposals for special issues that focus on specific technical challenges or that apply human-robot interaction research to further areas like social computing, consumer behavior, health, and education.
