A deep learning aided differential distinguisher improvement framework with more lightweight and universality

IF 3.9

Zone 4, Computer Science

Q2 COMPUTER SCIENCE, INFORMATION SYSTEMS

Citations: 0

Abstract

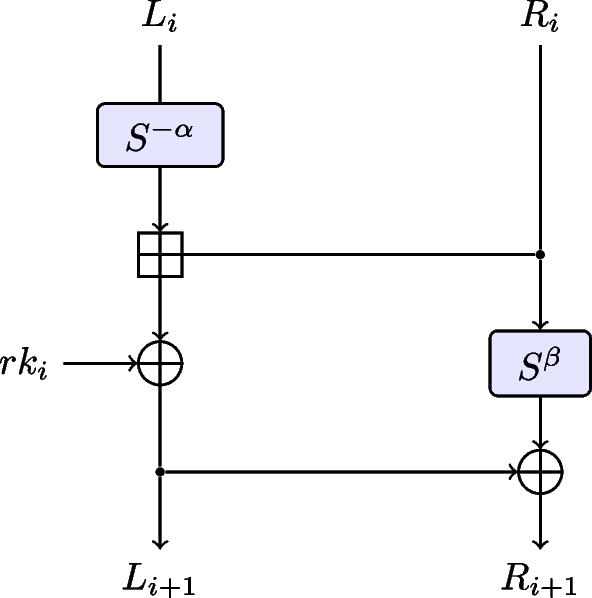

In CRYPTO 2019, Gohr opened up a new direction for cryptanalysis: he successfully applied deep learning to differential cryptanalysis of the NSA block cipher SPECK32/64, achieving higher accuracy than traditional differential distinguishers. To date, one of the mainstream research directions has been to improve the accuracy of neural distinguishers by increasing the training sample size and trying different neural networks. This mindset can lead to a huge number of parameters, a heavy computational load, and large memory consumption when training the distinguishers. In practical cryptanalysis, however, the applicability of an attack in a resource-constrained environment is very important. We therefore focus on cost optimization and aim to reduce the number of network parameters in differential neural cryptanalysis. In this paper, we propose two cost-optimized methods for improving neural distinguishers, one concerning the data format and one concerning the network structure. First, we obtain a partial output difference neural distinguisher that uses a training data format of only 4 bits, constructed with a new advantage-bit search algorithm based on two key improvement conditions. In addition, we perform an interpretability analysis of the new neural distinguishers; its results mainly concern the relationship between neural distinguishers, truncated differentials, and advantage bits. Second, we replace traditional convolution with depthwise separable convolution to reduce the training cost while affecting accuracy as little as possible. Overall, the number of training parameters can be reduced by less than 50% by using our new network structure for training neural distinguishers. Finally, we apply the new network structure to the partial output difference neural distinguishers. This combined approach reduces the number of parameters further, to approximately 30% of that of Gohr's distinguishers for SPECK.
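The partial output difference data format can be illustrated with a minimal Python sketch. It assumes Gohr's SPECK32/64 setup (input difference (0x0040, 0x0000); "real" samples are plaintext pairs with that difference, "random" samples use an unrelated second plaintext, and both are encrypted under the same random key), and it assumes that the 4-bit format consists of selected bits of the ciphertext difference. The positions in `SELECTED_BITS` are hypothetical placeholders, not the advantage bits found by the paper's search algorithm, and all function names are illustrative.

```python
import random

MASK = 0xFFFF  # SPECK32/64 operates on 16-bit words


def ror(x, r):
    return ((x >> r) | (x << (16 - r))) & MASK


def rol(x, r):
    return ((x << r) | (x >> (16 - r))) & MASK


def enc_one_round(p, k):
    """One SPECK32/64 round with rotation constants alpha = 7, beta = 2."""
    x, y = p
    x = ((ror(x, 7) + y) & MASK) ^ k
    y = rol(y, 2) ^ x
    return x, y


def expand_key(key, rounds):
    """SPECK key schedule; key = (k3, k2, k1, k0), where k0 is the first round key."""
    ks = [key[-1]]
    l = list(reversed(key[:-1]))  # l0, l1, l2
    for i in range(rounds - 1):
        # The key schedule reuses the round function with the round index as "key".
        l[i % 3], nk = enc_one_round((l[i % 3], ks[i]), i)
        ks.append(nk)
    return ks


def encrypt(p, ks):
    for k in ks:
        p = enc_one_round(p, k)
    return p


# HYPOTHETICAL bit positions in the 32-bit output difference (left word in the
# high 16 bits); the paper's advantage-bit search is not reproduced here.
SELECTED_BITS = (0, 1, 16, 17)
IN_DIFF = (0x0040, 0x0000)  # Gohr's input difference for SPECK32/64


def partial_diff_sample(rounds, real, rng):
    """Return the selected bits of C0 xor C1 for one real or random pair."""
    key = tuple(rng.getrandbits(16) for _ in range(4))
    ks = expand_key(key, rounds)
    p0 = (rng.getrandbits(16), rng.getrandbits(16))
    if real:
        p1 = (p0[0] ^ IN_DIFF[0], p0[1] ^ IN_DIFF[1])
    else:
        p1 = (rng.getrandbits(16), rng.getrandbits(16))
    c0, c1 = encrypt(p0, ks), encrypt(p1, ks)
    delta = ((c0[0] ^ c1[0]) << 16) | (c0[1] ^ c1[1])
    return [(delta >> b) & 1 for b in SELECTED_BITS]


def make_dataset(n, rounds=5, seed=0):
    """Build n labelled 4-bit samples (label 1 = real pair, 0 = random pair)."""
    rng = random.Random(seed)
    X, Y = [], []
    for _ in range(n):
        label = rng.getrandbits(1)
        X.append(partial_diff_sample(rounds, label == 1, rng))
        Y.append(label)
    return X, Y
```

For example, `make_dataset(10_000, rounds=5)` yields 4-bit feature vectors and labels that could be fed to any binary classifier. The `expand_key`/`encrypt` pair can be checked against the published SPECK32/64 test vector (key 1918 1110 0908 0100, plaintext 6574 694c, ciphertext a868 42f2).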
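A back-of-the-envelope Python sketch shows why depthwise separable convolution reduces parameters. It assumes Keras-style layers (a per-channel depthwise kernel followed by a 1×1 pointwise convolution, with a bias on the output channels) and Gohr-style residual-tower dimensions of 32 filters and kernel size 3. These dimensions are illustrative assumptions, and the counts cover a single convolution layer rather than the whole network, so they are not the paper's reported figures.

```python
def conv1d_params(kernel_size: int, in_ch: int, out_ch: int, bias: bool = True) -> int:
    """Standard 1-D convolution: one kernel_size x in_ch filter per output channel."""
    return kernel_size * in_ch * out_ch + (out_ch if bias else 0)


def separable_conv1d_params(kernel_size: int, in_ch: int, out_ch: int,
                            bias: bool = True, depth_multiplier: int = 1) -> int:
    """Depthwise separable 1-D convolution: a per-channel depthwise kernel,
    then a 1x1 pointwise convolution that mixes channels."""
    depthwise = kernel_size * in_ch * depth_multiplier
    pointwise = in_ch * depth_multiplier * out_ch
    return depthwise + pointwise + (out_ch if bias else 0)


if __name__ == "__main__":
    k, c = 3, 32  # assumed kernel size and filter count
    std = conv1d_params(k, c, c)
    sep = separable_conv1d_params(k, c, c)
    print(f"standard: {std}, separable: {sep}, ratio: {sep / std:.2f}")
```

Running it prints `standard: 3104, separable: 1152, ratio: 0.37`. The saving depends on the kernel size: with kernel size 1, the separable version actually has `in_ch` more parameters than the standard one, so the substitution pays off only for layers with wider kernels.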

Source journal: Cybersecurity

Subject area: Computer Science-Information Systems

CiteScore: 7.30

Self-citation rate: 0.00%

Articles published: 77

Review time: 9 weeks