A survey and study impact of tweet sentiment analysis via transfer learning in low resource scenarios

IF 1.8

Zone 3, Computer Science

Q3 COMPUTER SCIENCE, INTERDISCIPLINARY APPLICATIONS

Citations: 1

Abstract

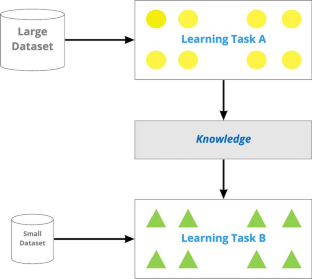

Sentiment analysis (SA) is a research area focused on identifying the contextual polarity of text. Deep learning currently achieves outstanding results on this task. However, training these algorithms requires large amounts of annotated data, which is expensive and difficult to obtain. In low-resource scenarios, this problem is even more significant because little data is available. Transfer learning (TL) can mitigate this problem because it makes it possible to develop some architectures using less data. Language models are one way of applying TL in natural language processing (NLP), and they have achieved competitive results. Nevertheless, some models require many hours of training and substantial computational resources, which some people and organizations do not have. In this paper, we explore the models BERT (Pretraining of Deep Bidirectional Transformers for Language Understanding), MultiFiT (Efficient Multilingual Language Model Fine-tuning), ALBERT (A Lite BERT for Self-supervised Learning of Language Representations), and RoBERTa (A Robustly Optimized BERT Pretraining Approach). In all of our experiments, these models obtain better results than CNN (convolutional neural network) and LSTM (long short-term memory) models. For the MultiFiT and RoBERTa models, we propose a pretrained language model (PTLM) using Twitter data. Using this approach, we obtained competitive results compared with models trained on formal-language datasets. The main goal is to show the impact of TL and language models by comparing results with other techniques and reporting the computational costs of these approaches.


Source journal

Language Resources and Evaluation

Engineering & Technology - Computer Science: Interdisciplinary Applications

CiteScore

6.50

Self-citation rate

3.70%

Articles published

55

Review time

>12 weeks

About the journal:

Language Resources and Evaluation is the first publication devoted to the acquisition, creation, annotation, and use of language resources, together with methods for evaluation of resources, technologies, and applications.

Language resources include language data and descriptions in machine readable form used to assist and augment language processing applications, such as written or spoken corpora and lexica, multimodal resources, grammars, terminology or domain specific databases and dictionaries, ontologies, multimedia databases, etc., as well as basic software tools for their acquisition, preparation, annotation, management, customization, and use.

Evaluation of language resources concerns assessing the state-of-the-art for a given technology, comparing different approaches to a given problem, assessing the availability of resources and technologies for a given application, benchmarking, and assessing system usability and user satisfaction.
