Multi-modal broad learning for material recognition

IF 1.3

Q4 COMPUTER SCIENCE, ARTIFICIAL INTELLIGENCE

Citations: 3

Abstract

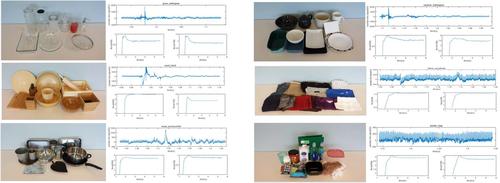

Funding: Joint Fund of Science & Technology Department of Liaoning Province and State Key Laboratory of Robotics, China, Grant/Award Number: 2020-KF-22-06

Material recognition plays an important role in the interaction between robots and their external environment. For example, household service robots that take over housework from humans must interact with everyday objects and determine their material properties. Images provide rich visual information about objects, but vision alone is often insufficient when objects are not visually distinct. Tactile signals, in contrast, capture multiple object characteristics such as texture, roughness, softness, and friction, and therefore provide another crucial channel for perception. How to integrate such multi-modal information effectively is an urgent open problem. This paper therefore proposes CFBRL-KCCA, a multi-modal material recognition framework for target recognition tasks. Preliminary features of each modality are extracted by cascaded broad learning and then combined through kernel canonical correlation learning, which accounts for the differences between the modalities of heterogeneous data. Finally, the framework is evaluated on an open dataset of household objects. The results demonstrate that the proposed fusion algorithm provides an effective strategy for material recognition.
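The abstract names the two building blocks (cascaded broad learning per modality, then kernel canonical correlation learning) without giving details. The sketch below shows one way such a pipeline can be chained; it is not the authors' CFBRL-KCCA implementation. Everything concrete here is an assumption: random, untrained mapping weights with `tanh` activations; three cascaded feature groups plus one enhancement group; RBF kernels with γ = 1/d; a regularized KCCA using the (K + κI)² form with κ = 0.1; fusion by concatenating the two sets of canonical projections; and synthetic data standing in for visual and tactile inputs. All function names (`cascaded_broad_features`, `kcca`, `fuse`, ...) are hypothetical.

```python
import numpy as np


def cascaded_broad_features(X, rng, n_groups=3, n_feat=20, n_enh=40):
    """Hypothetical cascaded broad-learning layer (random, untrained weights).

    Feature groups are chained: Z_1 = tanh(X W_1 + b_1),
    Z_k = tanh(Z_{k-1} W_k + b_k). Enhancement nodes H = tanh(Z W_h + b_h)
    are computed from all concatenated feature groups Z.
    """
    groups, inp = [], X
    for _ in range(n_groups):
        W = rng.standard_normal((inp.shape[1], n_feat)) / np.sqrt(inp.shape[1])
        b = rng.standard_normal(n_feat)
        inp = np.tanh(inp @ W + b)  # each group feeds the next one (cascade)
        groups.append(inp)
    Z = np.hstack(groups)
    Wh = rng.standard_normal((Z.shape[1], n_enh)) / np.sqrt(Z.shape[1])
    H = np.tanh(Z @ Wh + rng.standard_normal(n_enh))
    return np.hstack([Z, H])  # broad layer = feature nodes + enhancement nodes


def rbf_kernel(A, gamma):
    """Gaussian kernel matrix K_ij = exp(-gamma * ||a_i - a_j||^2)."""
    sq = np.sum(A ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * A @ A.T, 0.0)
    return np.exp(-gamma * d2)


def center_kernel(K):
    """Center the kernel in feature space: H K H with H = I - 11^T / n."""
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n
    return H @ K @ H


def kcca(Kx, Ky, n_comp=5, kappa=0.1):
    """Regularized kernel CCA with (K + kappa I)^2 regularization.

    Substituting alpha = (Kx + kappa I)^-1 a and beta = (Ky + kappa I)^-1 b
    turns the generalized eigenproblem into an SVD of Rx @ Ry, where
    R = (K + kappa I)^-1 K; the singular values are the canonical correlations.
    """
    I = np.eye(Kx.shape[0])
    Rx = np.linalg.solve(Kx + kappa * I, Kx)
    Ry = np.linalg.solve(Ky + kappa * I, Ky)
    U, s, Vt = np.linalg.svd(Rx @ Ry)  # s is sorted in descending order
    alpha = np.linalg.solve(Kx + kappa * I, U[:, :n_comp])
    beta = np.linalg.solve(Ky + kappa * I, Vt.T[:, :n_comp])
    return alpha, beta, s[:n_comp]


def fuse(X_vis, X_tac, seed=0, n_comp=5, kappa=0.1):
    """Broad features per modality, then KCCA; fuse by concatenating projections."""
    rng = np.random.default_rng(seed)
    Fv = cascaded_broad_features(X_vis, rng)
    Ft = cascaded_broad_features(X_tac, rng)
    Kv = center_kernel(rbf_kernel(Fv, 1.0 / Fv.shape[1]))  # gamma = 1/d (assumed)
    Kt = center_kernel(rbf_kernel(Ft, 1.0 / Ft.shape[1]))
    alpha, beta, corr = kcca(Kv, Kt, n_comp, kappa)
    fused = np.hstack([Kv @ alpha, Kt @ beta])
    return fused, corr


# Synthetic demo: both "modalities" are noisy views of 3 shared latent factors.
rng = np.random.default_rng(42)
latent = rng.standard_normal((60, 3))
X_vis = latent @ rng.standard_normal((3, 32)) + 0.1 * rng.standard_normal((60, 32))
X_tac = latent @ rng.standard_normal((3, 16)) + 0.1 * rng.standard_normal((60, 16))
fused, corr = fuse(X_vis, X_tac)
print(fused.shape)  # (60, 10)
print(np.round(corr, 3))
```

Concatenation is only one possible fusion choice. In standard broad learning the output weights are fit by ridge regression, so a classifier could be trained on `fused` in the same way.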

Source journal: Cognitive Computation and Systems

Computer Science-Computer Science Applications

CiteScore: 2.50

Self-citation rate: 0.00%

Articles published: 39

Review time: 10 weeks
