Microsaccade-inspired event camera for robotics

IF 26.1

Zone 1: Computer Science

Q1 ROBOTICS

Citations: 0

Abstract

Neuromorphic vision sensors or event cameras have made the visual perception of extremely low reaction time possible, opening new avenues for high-dynamic robotics applications. These event cameras’ output is dependent on both motion and texture. However, the event camera fails to capture object edges that are parallel to the camera motion. This is a problem intrinsic to the sensor and therefore challenging to solve algorithmically. Human vision deals with perceptual fading using the active mechanism of small involuntary eye movements, the most prominent ones called microsaccades. By moving the eyes constantly and slightly during fixation, microsaccades can substantially maintain texture stability and persistence. Inspired by microsaccades, we designed an event-based perception system capable of simultaneously maintaining low reaction time and stable texture. In this design, a rotating wedge prism was mounted in front of the aperture of an event camera to redirect light and trigger events. The geometrical optics of the rotating wedge prism allows for algorithmic compensation of the additional rotational motion, resulting in a stable texture appearance and high informational output independent of external motion. The hardware device and software solution are integrated into a system, which we call artificial microsaccade–enhanced event camera (AMI-EV). Benchmark comparisons validated the superior data quality of AMI-EV recordings in scenarios where both standard cameras and event cameras fail to deliver. Various real-world experiments demonstrated the potential of the system to facilitate robotics perception both for low-level and high-level vision tasks.
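The compensation idea in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes an idealized thin wedge prism that deviates every ray by a fixed angle of about (n - 1)·α, so the whole image is shifted along a circle whose phase follows the prism rotation. The function names, the event tuple layout `(t, x, y, polarity)`, and the parameters `radius_px`, `omega`, and `phase0` are hypothetical and chosen only for illustration.

```python
import math

def deviation_radius_px(n, alpha_rad, focal_px):
    """Radius (in pixels) of the circular image shift, assuming the
    thin-prism approximation: deviation angle ~ (n - 1) * alpha."""
    delta = (n - 1.0) * alpha_rad
    return focal_px * math.tan(delta)

def prism_offset(t, radius_px, omega, phase0=0.0):
    """Image-plane offset induced by the prism at time t, assuming the
    prism spins at a constant angular speed omega (rad/s)."""
    theta = omega * t + phase0
    return radius_px * math.cos(theta), radius_px * math.sin(theta)

def compensate_events(events, radius_px, omega, phase0=0.0):
    """Subtract the known prism-induced offset from each event.

    events: iterable of (t, x, y, polarity) tuples (hypothetical layout).
    Returns a list of events with compensated sub-pixel coordinates.
    """
    out = []
    for t, x, y, p in events:
        dx, dy = prism_offset(t, radius_px, omega, phase0)
        out.append((t, x - dx, y - dy, p))
    return out
```

Under these assumptions, a static scene point traces a circle on the sensor while the prism rotates, which triggers events on edges of every orientation; subtracting the time-dependent offset maps those events back to a single stable location. A real system would also need calibration of the rotation phase and handling of lens distortion, neither of which is modeled here.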


求助全文

约1分钟内获得全文

求助全文

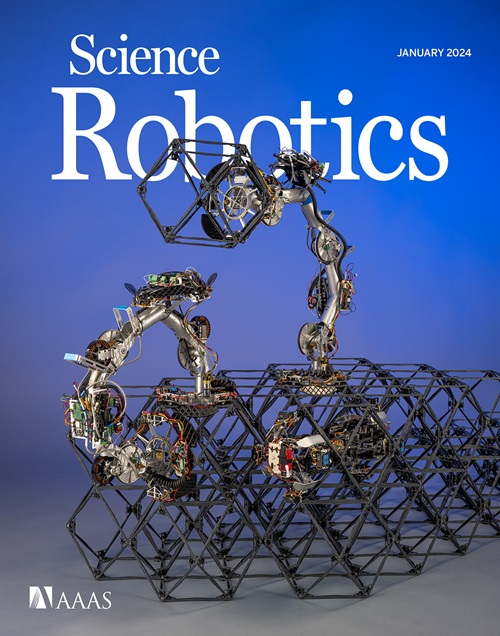

Source journal

Science Robotics

Mathematics-Control and Optimization

CiteScore

30.60

Self-citation rate

2.80%

Articles published

83

About the journal:

Science Robotics publishes original, peer-reviewed, science- or engineering-based research articles that advance the field of robotics. The journal also features editor-commissioned Reviews. An international team of academic editors holds Science Robotics articles to the same high-quality standard that is the hallmark of the Science family of journals.

Sub-topics include: actuators, advanced materials, artificial intelligence, autonomous vehicles, bio-inspired design, exoskeletons, fabrication, field robotics, human-robot interaction, humanoids, industrial robotics, kinematics, machine learning, material science, medical technology, motion planning and control, micro- and nano-robotics, multi-robot control, sensors, service robotics, social and ethical issues, soft robotics, and space, planetary and undersea exploration.
